import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

from io import StringIO

# Retina mode

%matplotlib inline

%config InlineBackend.figure_format = 'retina'

from latexify import latexify, format_axes

Decision Trees: Real Input and Discrete Output

ML

dataset = """Day,Temperature,PlayTennis
D1,40,No
D2,48,No
D3,60,Yes
D4,72,Yes
D5,80,Yes
D6,90,No"""

f = StringIO(dataset)

df = pd.read_csv(f, sep=",")

df

| | Day | Temperature | PlayTennis |
|---|---|---|---|
| 0 | D1 | 40 | No |
| 1 | D2 | 48 | No |
| 2 | D3 | 60 | Yes |
| 3 | D4 | 72 | Yes |
| 4 | D5 | 80 | Yes |
| 5 | D6 | 90 | No |
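The trees below use `criterion='entropy'`, so it helps to work the scored quantity by hand for this table (a hand calculation, not notebook output). The root holds three Yes and three No rows, so its entropy is one bit. A threshold $t$ sends rows with Temperature $\le t$ to the left child and the rest to the right child, and the split is scored by its information gain:

$$
H(S) = -\sum_{c} p_c \log_2 p_c = -\tfrac{3}{6}\log_2\tfrac{3}{6} - \tfrac{3}{6}\log_2\tfrac{3}{6} = 1
$$

$$
\mathrm{IG}(S, t) = H(S) - \frac{|S_{\le t}|}{|S|}\, H(S_{\le t}) - \frac{|S_{> t}|}{|S|}\, H(S_{> t})
$$

For $t = 54$ (the midpoint of 48 and 60), the left side {D1, D2} is pure and the right side {D3, D4, D5, D6} has three Yes and one No:

$$
\mathrm{IG}(S, 54) = 1 - \tfrac{2}{6}\cdot 0 - \tfrac{4}{6}\left(-\tfrac{3}{4}\log_2\tfrac{3}{4} - \tfrac{1}{4}\log_2\tfrac{1}{4}\right) \approx 1 - \tfrac{4}{6}(0.811) \approx 0.459
$$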

latexify(columns=2)

from sklearn.tree import DecisionTreeClassifier

from sklearn.tree import export_graphviz

import graphviz

dt = DecisionTreeClassifier(criterion='entropy', max_depth=1)

X = df[['Temperature']]

y = df['PlayTennis']

dt.fit(X, y)


DecisionTreeClassifier(criterion='entropy', max_depth=1)
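A minimal sketch of how a depth-1 tree can choose a threshold on a single real-valued feature: sort the values, try the midpoint between each pair of consecutive distinct values, and keep the candidate with the largest information gain. The helpers `entropy` and `best_threshold` are hypothetical names for illustration; this is plain NumPy, not scikit-learn's internal implementation.

```python
import numpy as np

def entropy(labels):
    """Shannon entropy (in bits) of a sequence of class labels (hypothetical helper)."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(-(p * np.log2(p)).sum())

def best_threshold(x, y):
    """Exhaustive search over midpoints of consecutive sorted feature values.

    Returns (threshold, information_gain) for the split x <= threshold.
    Hypothetical helper, not part of scikit-learn.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y)
    order = np.argsort(x)
    x, y = x[order], y[order]
    parent = entropy(y)
    best_t, best_gain = None, -np.inf
    for i in range(1, len(x)):
        if x[i] == x[i - 1]:
            continue  # no threshold can separate equal feature values
        t = float((x[i - 1] + x[i]) / 2)  # midpoint candidate
        left, right = y[:i], y[i:]
        # Weighted average entropy of the two children
        child = (len(left) * entropy(left) + len(right) * entropy(right)) / len(y)
        gain = parent - child
        if gain > best_gain:
            best_t, best_gain = t, gain
    return best_t, best_gain

temperature = [40, 48, 60, 72, 80, 90]
play = ["No", "No", "Yes", "Yes", "Yes", "No"]
t, gain = best_threshold(temperature, play)
print(t, round(gain, 3))  # → 54.0 0.459
```

On this table the search picks 54.0, which we would expect to agree with the root threshold scikit-learn stores in `dt.tree_.threshold[0]`.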

# Function to store the decision tree's graphviz representation as a PDF
def save_decision_tree_as_pdf(dt, feature_names, class_names, filename):
    dot_data = export_graphviz(dt, out_file=None, feature_names=feature_names,
                               class_names=class_names, filled=True, rounded=True,
                               special_characters=True)
    graph = graphviz.Source(dot_data)
    # Writes the DOT source to `filename` and the rendered tree to `filename.pdf`
    graph.render(filename, format='pdf')

save_decision_tree_as_pdf(dt, ['Temperature'], ['No', 'Yes'], '../figures/decision-trees/real-ip-1')

dt2 = DecisionTreeClassifier(criterion='entropy', max_depth=2)

dt2.fit(X, y)

save_decision_tree_as_pdf(dt2, ['Temperature'], ['No', 'Yes'], '../figures/decision-trees/real-ip-2')

Heavily borrowed from and inspired by https://jakevdp.github.io/PythonDataScienceHandbook/05.08-random-forests.html

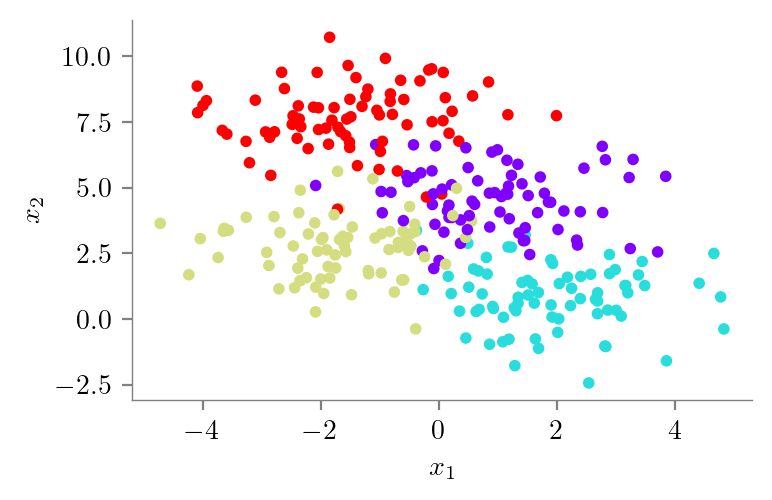

from sklearn.datasets import make_blobs

X, y = make_blobs(n_samples=300, centers=4,
                  random_state=0, cluster_std=1.2)

plt.scatter(X[:, 0], X[:, 1], c=y, s=10, cmap='rainbow')

format_axes(plt.gca())

plt.xlabel(r"$x_1$")

plt.ylabel(r"$x_2$")

Text(0, 0.5, '$x_2$')

from sklearn.tree import DecisionTreeClassifier

def visualize_tree(depth, X, y, ax=None, cmap='rainbow'):
    """Fit a depth-limited tree on (X, y) and save its decision regions as a PDF."""
    model = DecisionTreeClassifier(max_depth=depth)
    ax = ax or plt.gca()
    print(model, depth)

    # Plot the training points
    ax.scatter(X[:, 0], X[:, 1], c=y, s=10, cmap=cmap,
               vmin=y.min(), vmax=y.max(), zorder=3)
    ax.axis('tight')
    format_axes(ax)
    ax.set_xlabel(r"$x_1$")
    ax.set_ylabel(r"$x_2$")
    xlim = ax.get_xlim()
    ylim = ax.get_ylim()

    # Fit the estimator and predict on a 200 x 200 grid covering the plot
    model.fit(X, y)
    xx, yy = np.meshgrid(np.linspace(*xlim, num=200),
                         np.linspace(*ylim, num=200))
    Z = model.predict(np.c_[xx.ravel(), yy.ravel()]).reshape(xx.shape)

    # Shade each predicted class region; contourf takes vmin/vmax rather than clim
    n_classes = len(np.unique(y))
    ax.contourf(xx, yy, Z, alpha=0.2,
                levels=np.arange(n_classes + 1) - 0.5,
                cmap=cmap, vmin=y.min(), vmax=y.max(),
                zorder=1)
    ax.set(xlim=xlim, ylim=ylim)

    plt.tight_layout()
    plt.savefig(f"../figures/decision-trees/dt-{depth}.pdf", bbox_inches="tight")
    plt.clf()

for depth in range(1, 11):
    visualize_tree(depth, X, y)

DecisionTreeClassifier(max_depth=1) 1
DecisionTreeClassifier(max_depth=2) 2
DecisionTreeClassifier(max_depth=3) 3
DecisionTreeClassifier(max_depth=4) 4
DecisionTreeClassifier(max_depth=5) 5
DecisionTreeClassifier(max_depth=6) 6
DecisionTreeClassifier(max_depth=7) 7
DecisionTreeClassifier(max_depth=8) 8
DecisionTreeClassifier(max_depth=9) 9
DecisionTreeClassifier(max_depth=10) 10
<Figure size 400x246.914 with 0 Axes>
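The saved figures show the decision regions qualitatively as `max_depth` grows from 1 to 10. A minimal sketch for putting numbers on the same sweep, assuming a stratified 70/30 train/test split with `random_state=0` (the split fraction and seeds are our assumptions, not part of the original notebook): training accuracy tends to climb as the tree deepens, while held-out accuracy typically stops improving once the tree starts carving out small regions around individual overlapping points.

```python
from sklearn.datasets import make_blobs
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Same synthetic data as the plots above
X, y = make_blobs(n_samples=300, centers=4, random_state=0, cluster_std=1.2)

# Assumed hold-out protocol: 30% test, stratified by class, fixed seed
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y)

depths = list(range(1, 11))
train_acc, test_acc = [], []
for depth in depths:
    model = DecisionTreeClassifier(max_depth=depth, random_state=0)
    model.fit(X_train, y_train)
    train_acc.append(model.score(X_train, y_train))  # mean accuracy on training points
    test_acc.append(model.score(X_test, y_test))     # mean accuracy on held-out points

for depth, tr, te in zip(depths, train_acc, test_acc):
    print(f"depth={depth:2d}  train={tr:.3f}  test={te:.3f}")
```

Reading the two columns side by side is one way to pick a depth, rather than relying on how the regions look.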