import matplotlib.pyplot as plt

import numpy as np

import torch

%config InlineBackend.figure_format = 'retina'

%matplotlib inlineTaylor’s Series

ML

Tutorial

f = lambda x, y: x**2 + y**2

def f(x, y):

return x**2 + y**2

f_dash = torch.func.grad(f, argnums=(0, 1))f_dash<function __main__.f(x, y)>def f2(argument):

x, y= argument

return x**2 + y**2torch.func.grad(f2)(torch.tensor([1.0, 1.0]))tensor([2., 2.])f_dash(torch.tensor(1.0), torch.tensor(1.0))(tensor(2.), tensor(2.))x = torch.tensor(1.0, requires_grad=True)

y = torch.tensor(1.0, requires_grad=True)

print("Before backward: ", x.grad, y.grad)

z = f(x, y)

z.backward()

print("After backward: ", x.grad, y.grad)Before backward: None None

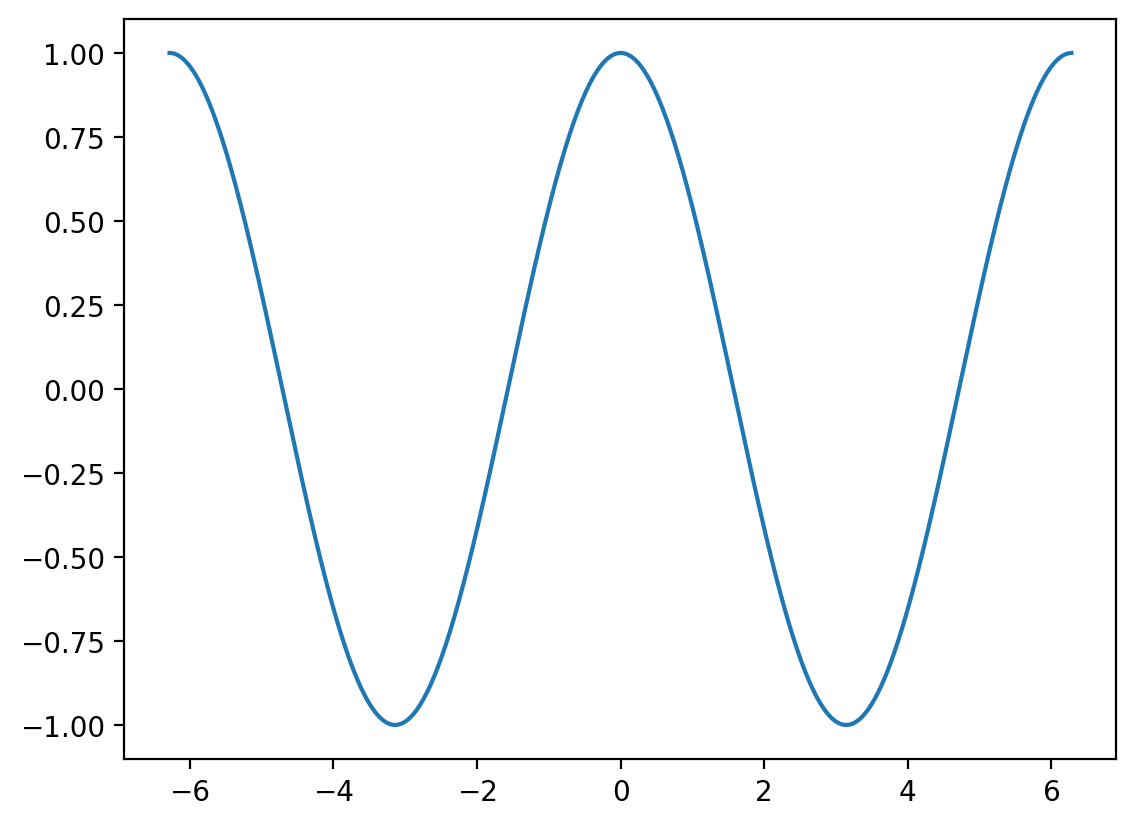

After backward: tensor(2.) tensor(2.)f = lambda x: torch.cos(x)x_range = torch.arange(-2*np.pi, 2*np.pi, 0.01)

y_range = f(x_range)

plt.plot(x_range, y_range)

def nth_order_appx(f, n, x0=0.0, verbose=False):

x0 = torch.tensor(x0)

derivs = {1:torch.func.grad(f)}

vals = {0:f(x0), 1:derivs[1](x0)}

if verbose:

print("f(x0) = {}".format(vals[0]))

print("f'(x0) = {}".format(vals[1]))

for i in range(2, n+1):

derivs[i] = torch.func.grad(derivs[i-1])

vals[i] = derivs[i](x0)

if verbose:

d = "'"*i

print("f{}(x0) = {}".format(d, vals[i]))

def g(x):

x_diff = x - x0

str_rep = "f(x) = f(x0) + "

out = vals[0].repeat(x.shape)

for i in range(1, n+1):

str_rep += f"{vals[i]} * (x-{x0.item()})^{i} / {i}! + "

out += vals[i] * x_diff**i / torch.math.factorial(i)

if verbose:

print("--"*40)

print(str_rep)

return out

return g

f = lambda x: torch.cos(x)

_ = nth_order_appx(f, 1, 0.0, verbose=True)(x_range)f(x0) = 1.0

f'(x0) = -0.0

--------------------------------------------------------------------------------

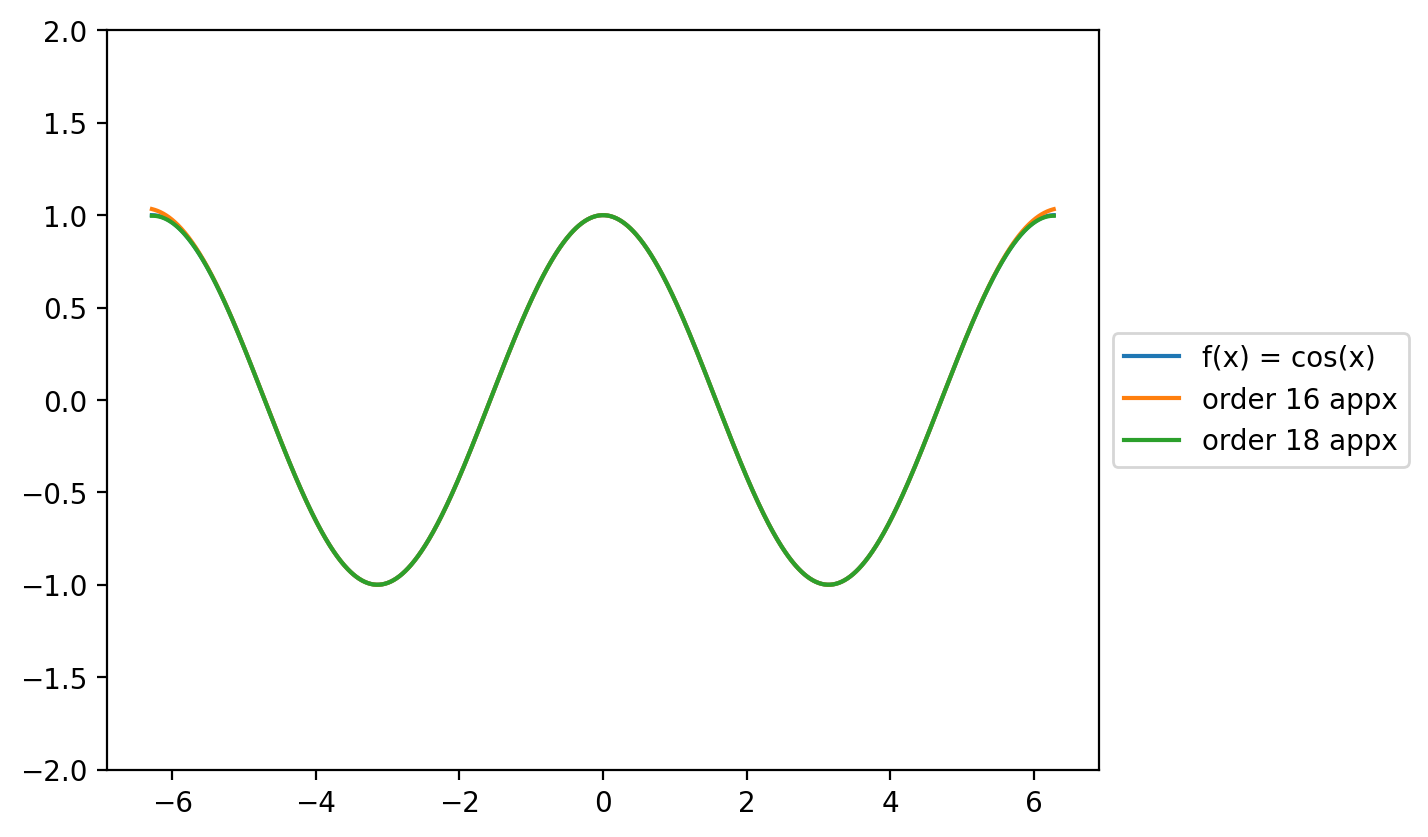

f(x) = f(x0) + -0.0 * (x-0.0)^1 / 1! + plt.plot(x_range, f(x_range), label="f(x) = cos(x)")

for i in range(16, 19, 2):

plt.plot(x_range, nth_order_appx(f, i, 0.0)(x_range), label=f"order {i} appx")

plt.ylim(-2, 2)

# legend outside

plt.legend(loc='center left', bbox_to_anchor=(1, 0.5))

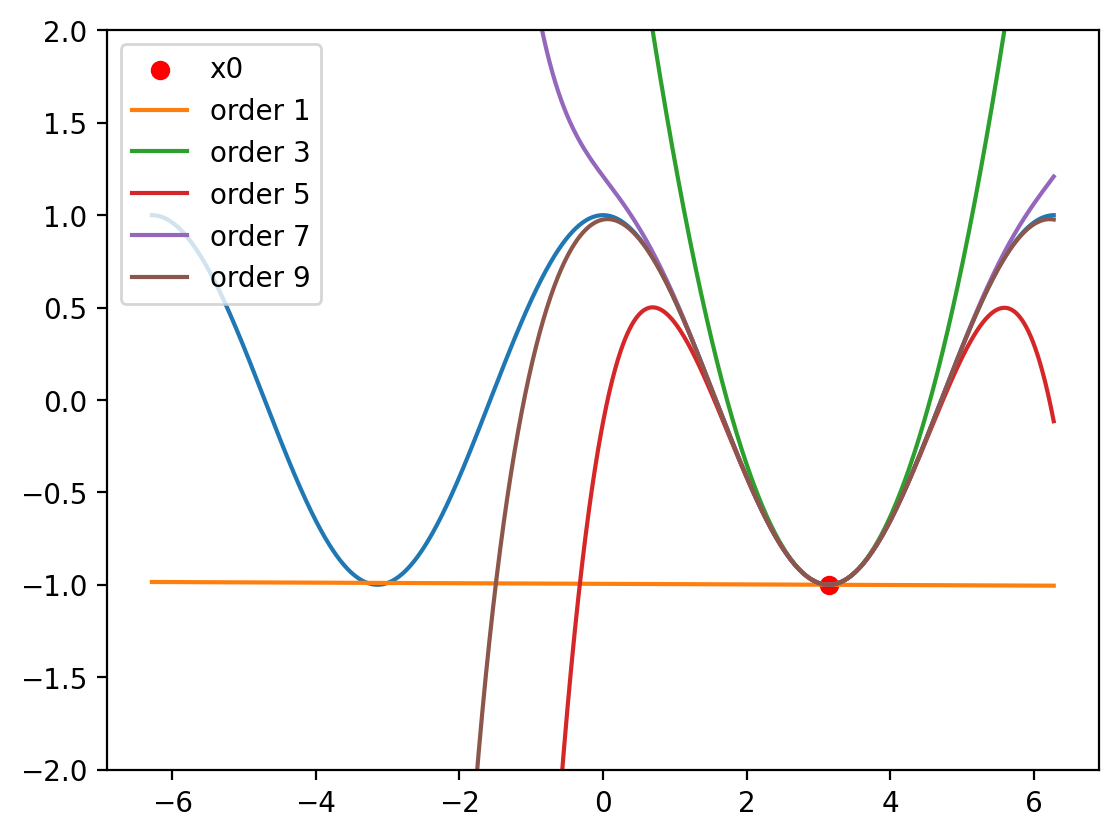

f = lambda x: torch.cos(x)

x0 = 3.14

plt.plot(x_range, f(x_range))

plt.scatter(x0, f(torch.tensor(x0)), c='r', label='x0')

for i in range(1, 11, 2):

plt.plot(x_range, nth_order_appx(f, i, 3.14)(x_range), label=f"order {i}")

plt.ylim(-2, 2)

plt.legend()

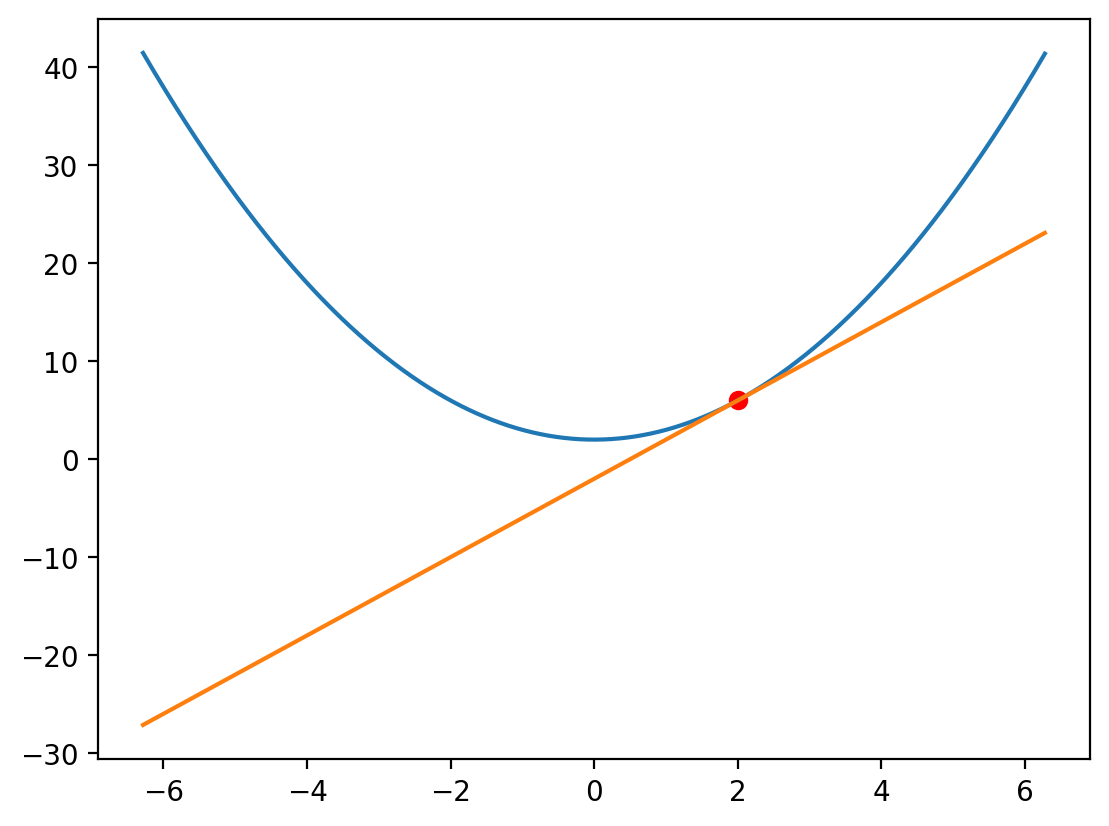

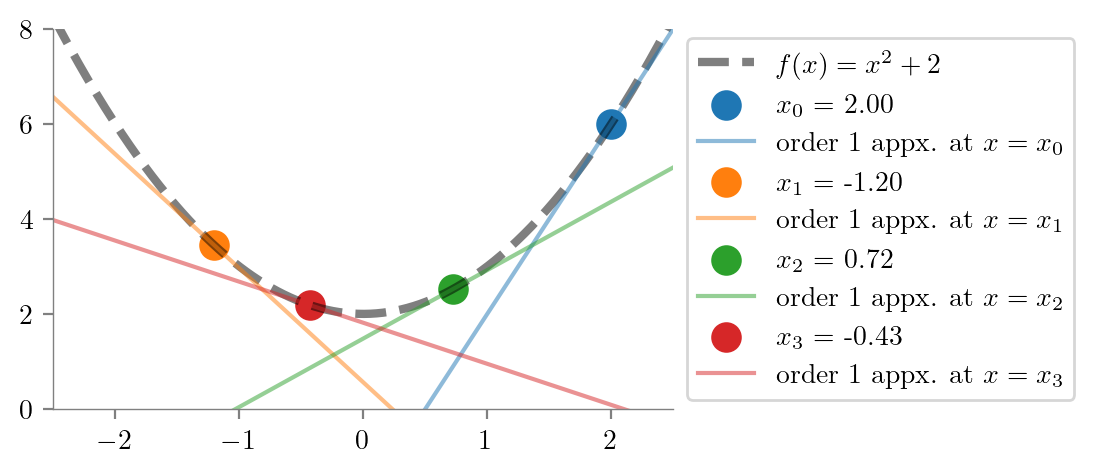

f = lambda x: x**2 + 2

plt.plot(x_range, f(x_range))

x0 = 2.0

plt.scatter(x0, f(torch.tensor(x0)), c='r', label='x0')

plt.plot(x_range, nth_order_appx(f, 1, x0)(x_range), label=f"order 1")

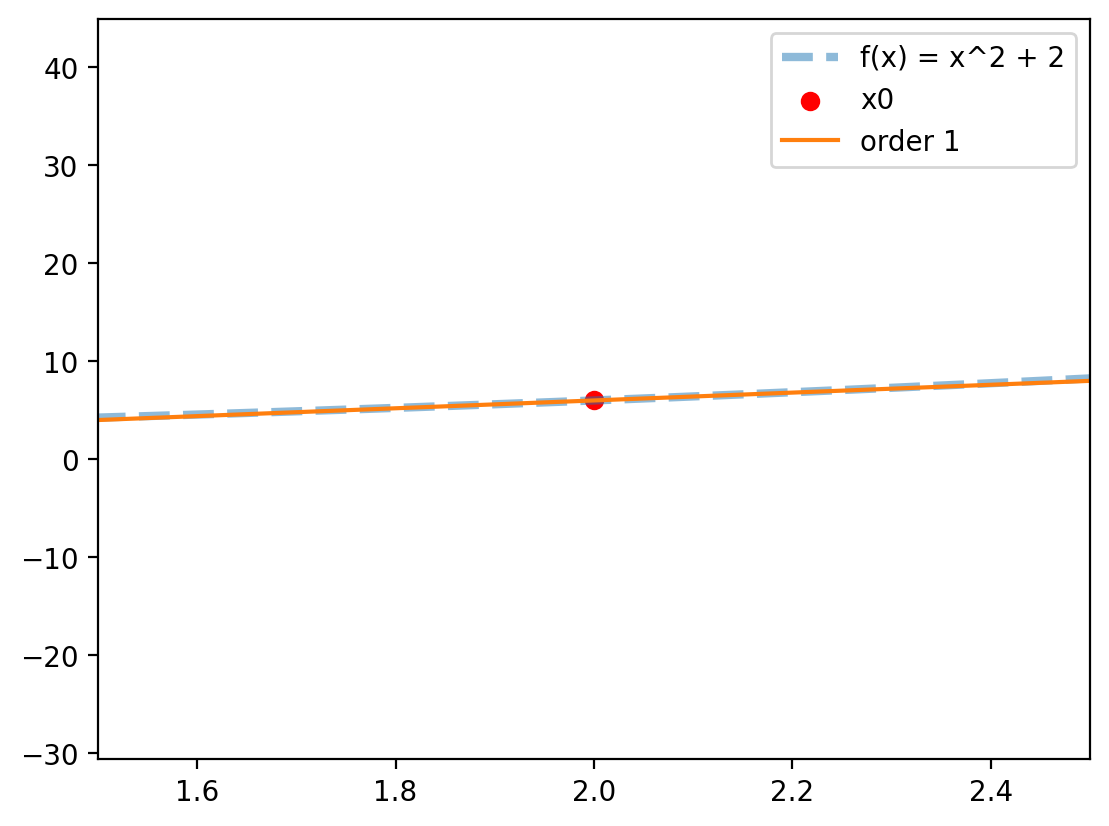

plt.plot(x_range, f(x_range), label="f(x) = x^2 + 2", lw=3, alpha=0.5, ls='--')

plt.scatter(x0, f(torch.tensor(x0)), c='r', label='x0')

plt.plot(x_range, nth_order_appx(f, 1, x0)(x_range), label=f"order 1")

plt.xlim(1.5, 2.5)

plt.legend()

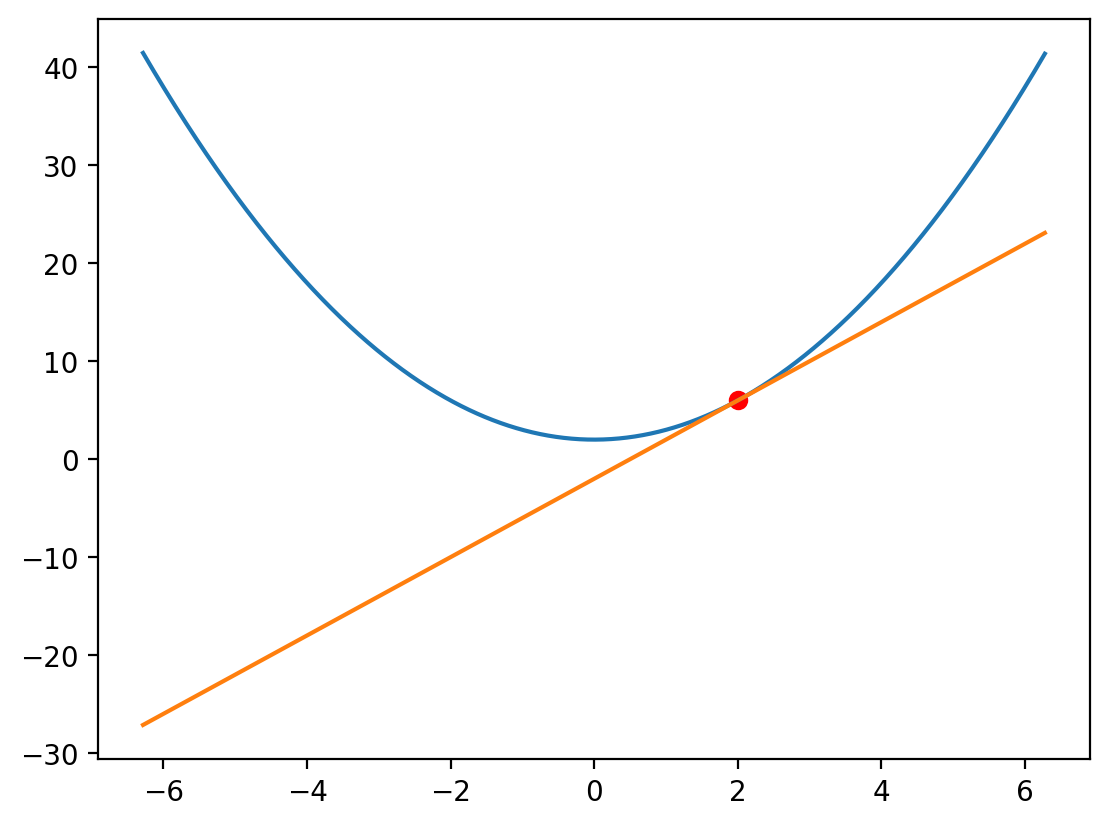

f = lambda x: x**2 + 2

plt.plot(x_range, f(x_range))

x0 = 2.0

plt.scatter(x0, f(torch.tensor(x0)), c='r', label='x0')

plt.plot(x_range, nth_order_appx(f, 1, x0)(x_range), label=f"order 1")

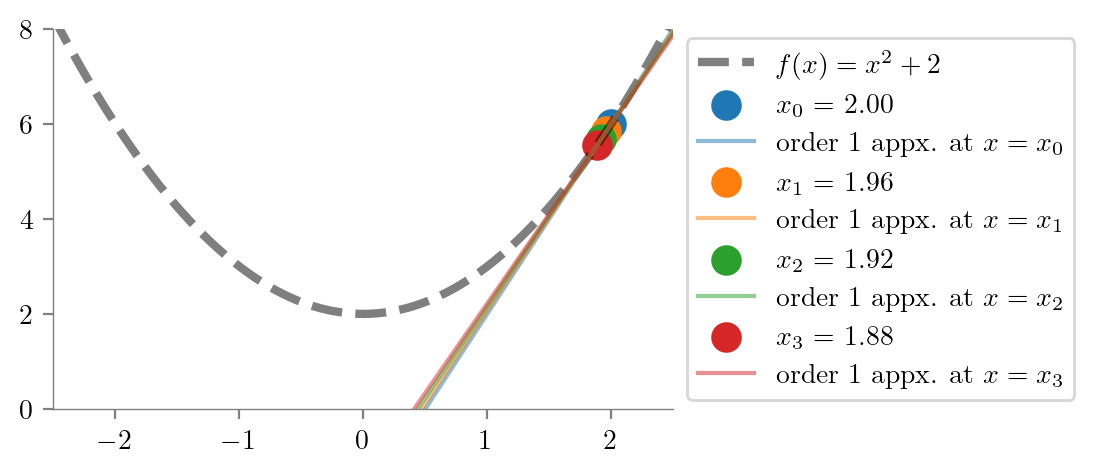

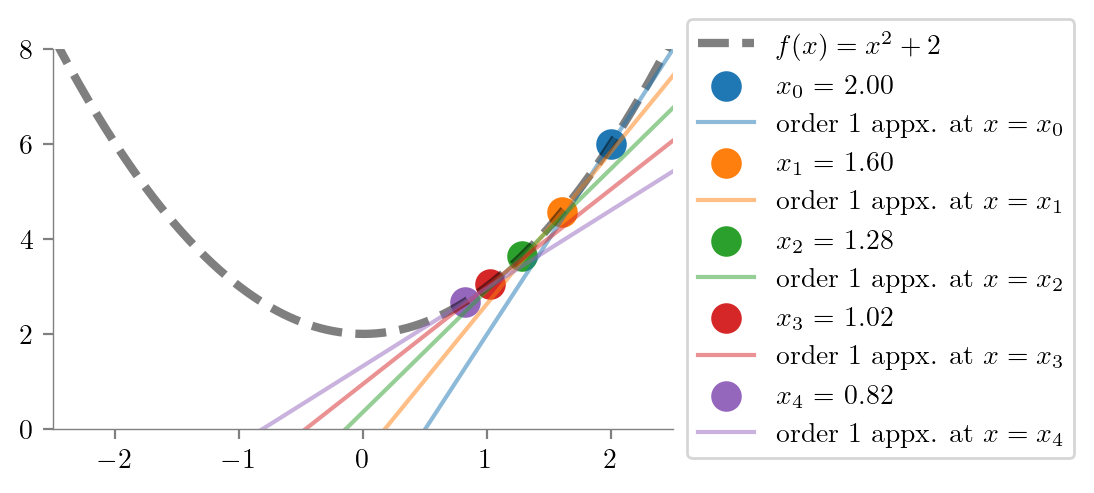

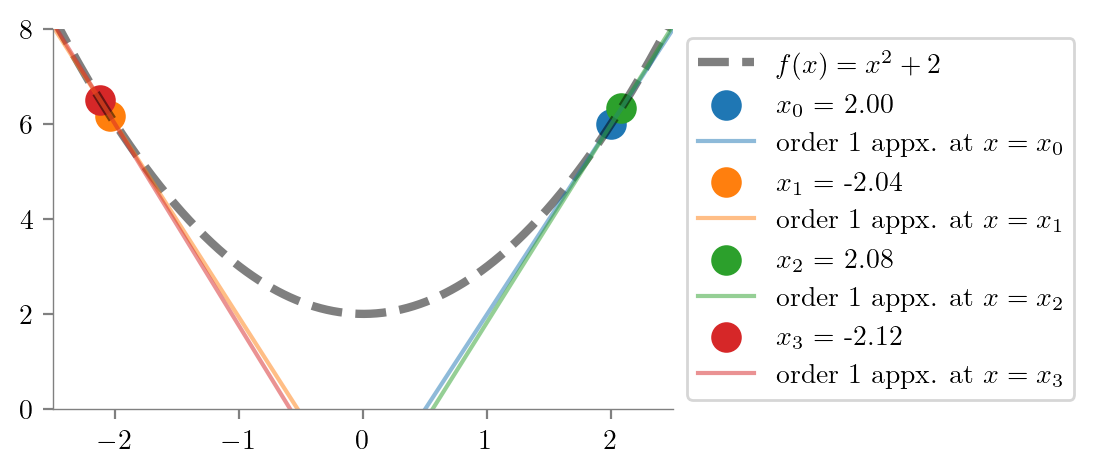

from latexify import latexify, format_axeslatexify(columns=2)def plot_gd(alpha=0.1, iter=3):

x0 = torch.tensor(2.0)

xi = x0

plt.plot(x_range, f(x_range), label=r"$f(x) = x^2 + 2$", lw=3, alpha=0.5, ls='--', color='k')

for i in range(iter):

plt.scatter(xi, f(xi), label=fr'$x_{i}$ = {xi.item():0.2f}', s=100, c=f"C{i}")

with torch.no_grad():

appx = nth_order_appx(f, 1, xi)(x_range)

plt.plot(x_range, appx, label=fr"order 1 appx. at $x=x_{i}$", c=f"C{i}", alpha=0.5)

xi = xi - alpha * torch.func.grad(f)(xi)

plt.xlim(-2.5, 2.5)

plt.ylim(0, 8)

# legend outside plot

plt.legend(loc='center left', bbox_to_anchor=(1, 0.5))

format_axes(plt.gca())plot_gd(alpha=0.1, iter=5)

plt.savefig("../figures/mml/gd-lr-0.1.pdf", bbox_inches="tight")

plot_gd(alpha=0.8, iter=4)

plt.savefig("../figures/mml/gd-lr-0.8.pdf", bbox_inches="tight")

plot_gd(alpha=1.01, iter=4)

plt.savefig("../figures/mml/gd-lr-1.01.pdf", bbox_inches="tight")

plot_gd(alpha=0.01, iter=4)

plt.savefig("../figures/mml/gd-lr-0.01.pdf", bbox_inches="tight")