import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

%config InlineBackend.figure_format = 'retina'

Conditioning and Linear Regression

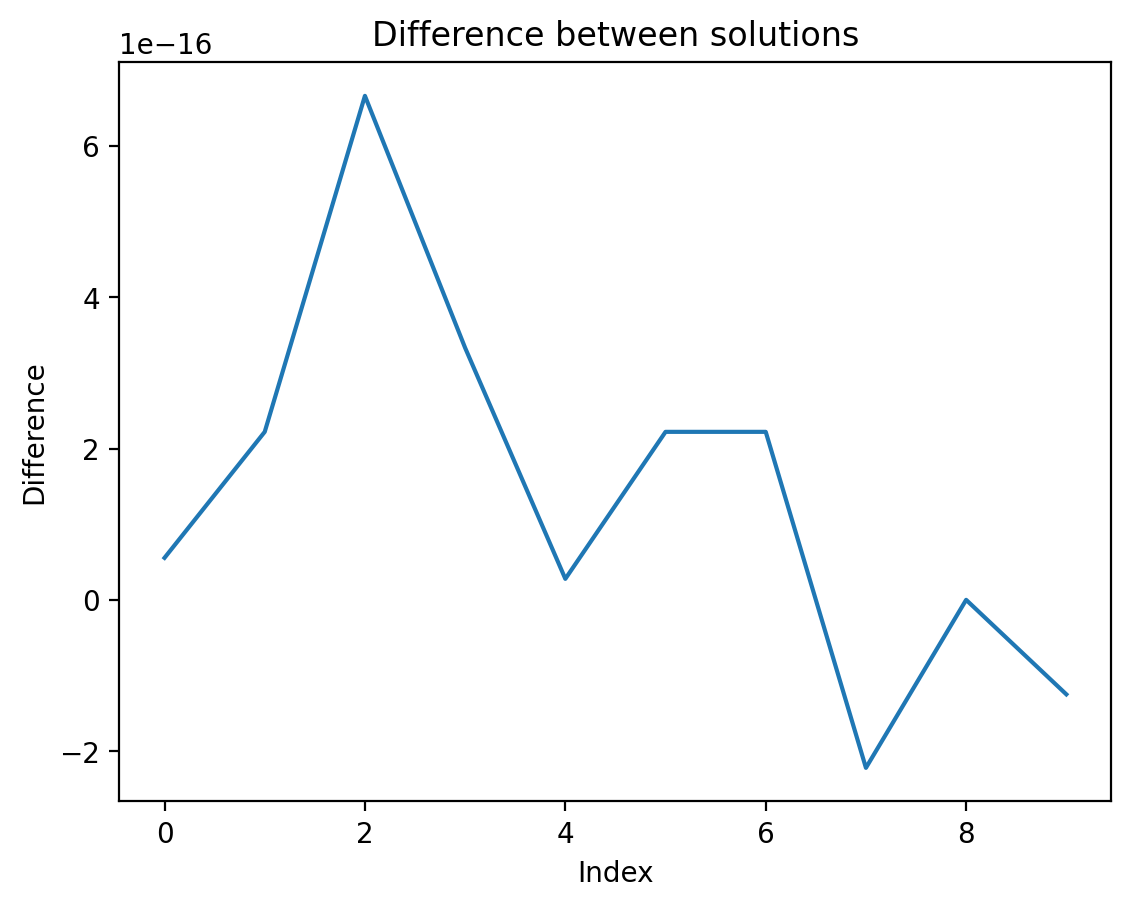

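# Compare np.linalg.solve with np.linalg.inv for the linear regression normal equations.
# Conditioning is a property of the matrix X.T @ X, not of the routine applied to it;
# np.linalg.solve is generally preferred because it avoids forming the explicit inverse.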

# Generate data

n = 100

p = 10

X = np.random.randn(n, p)

theta = np.random.randn(p)

y = X @ theta + np.random.randn(n)

# Solve normal equations

theta_hat = np.linalg.solve(X.T @ X, X.T @ y)

theta_hat_inv = np.linalg.inv(X.T @ X) @ X.T @ y

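# Compare the condition numbers.
# In the 2-norm, cond(A) = sigma_max / sigma_min = cond(inv(A)), so both lines print
# the same value. For this well-conditioned random X the two solutions agree closely.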

print(np.linalg.cond(X.T @ X))

print(np.linalg.cond(np.linalg.inv(X.T @ X)))

# Plot the difference between the two solutions

plt.plot(theta_hat - theta_hat_inv)

plt.title('Difference between solutions')

plt.xlabel('Index')

plt.ylabel('Difference')

plt.show()

2.980877596192165

2.980877596192165
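
The Gaussian design above is well conditioned, so the two solvers are hard to tell apart. The sketch below is a hypothetical follow-up cell, not part of the original experiment. It builds a design matrix with prescribed singular values so that cond(X) is about 1e5 and cond(X.T @ X) is about 1e10, then compares `np.linalg.solve`, `np.linalg.inv`, and `np.linalg.lstsq` on noise-free targets. The seed, the singular-value range, and the helper names `rel_err` and `backward_err` are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)  # assumed seed, only for reproducibility
n, p = 100, 10

# Build X = U diag(s) V^T with singular values from 1 down to 1e-5,
# so cond(X) is about 1e5 by construction.
U, _ = np.linalg.qr(rng.standard_normal((n, p)))  # n x p, orthonormal columns
V, _ = np.linalg.qr(rng.standard_normal((p, p)))  # p x p, orthogonal
s = np.logspace(0, -5, p)
X = (U * s) @ V.T

theta = rng.standard_normal(p)
y = X @ theta  # noise-free, so theta is the exact least-squares solution

A = X.T @ X
b = X.T @ y

theta_solve = np.linalg.solve(A, b)                  # normal equations via LU
theta_inv = np.linalg.inv(A) @ b                     # normal equations via explicit inverse
theta_lstsq = np.linalg.lstsq(X, y, rcond=None)[0]   # SVD-based, works on X directly


def rel_err(est):
    """Relative forward error with respect to the true coefficients."""
    return np.linalg.norm(est - theta) / np.linalg.norm(theta)


def backward_err(est):
    """Normalized residual of the normal equations A @ est = b."""
    return np.linalg.norm(A @ est - b) / (np.linalg.norm(A, 2) * np.linalg.norm(est))


cond_X = np.linalg.cond(X)
cond_A = np.linalg.cond(A)
print(f"cond(X)       = {cond_X:.3e}")
print(f"cond(X.T @ X) = {cond_A:.3e}")

for name, est in [("solve", theta_solve), ("inv", theta_inv), ("lstsq", theta_lstsq)]:
    print(f"{name:>5}: forward error = {rel_err(est):.2e}, "
          f"normal-equation residual = {backward_err(est):.2e}")
```

Forming X.T @ X squares the condition number, which is why both normal-equation solutions usually lose far more digits than `np.linalg.lstsq`. Between the other two, `np.linalg.solve` typically leaves the smaller normal-equation residual, although the exact figures depend on the data and the platform.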