Kappa Example: Spam Classification

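| Email | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | Summary |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Ann A | S | N | S | S | N | S | N | N | S | S | 6 Spam, 4 Not |
| Ann B | S | N | S | N | N | S | N | S | S | S | 6 Spam, 4 Not |
| Agree? | ✓ | ✓ | ✓ | ✗ | ✓ | ✓ | ✓ | ✗ | ✓ | ✓ | 8/10 = 80% agree |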

| Step | Calculation | Result |
|---|---|---|
| P_observed | 8/10 | 0.80 |
| P_expected | (0.6×0.6) + (0.4×0.4) | 0.52 |
| κ | (0.80 - 0.52) / (1 - 0.52) | 0.58 |

80% sounds good, but κ=0.58 reveals it's only moderately better than chance!

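    from sklearn.metrics import cohen_kappa_score

    ann_a = list("SNSSNSNNSS")  # Ann A's labels from the example (S = spam, N = not spam)
    ann_b = list("SNSNNSNSSS")  # Ann B's labels
    kappa = cohen_kappa_score(ann_a, ann_b)  # ≈ 0.58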
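The arithmetic in the table can also be reproduced without any library. The following is a minimal sketch; the function name `cohen_kappa` is illustrative and not part of scikit-learn, and it assumes the two annotators do not agree purely by construction (P_expected < 1).

```python
from collections import Counter

def cohen_kappa(labels_a, labels_b):
    """Return (p_observed, p_expected, kappa) for two equal-length label lists."""
    n = len(labels_a)
    # Observed agreement: fraction of items where both annotators chose the same label
    p_observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement: chance of matching, given each annotator's own label rates
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_expected = sum((freq_a[label] / n) * (freq_b[label] / n)
                     for label in set(labels_a) | set(labels_b))
    kappa = (p_observed - p_expected) / (1 - p_expected)
    return p_observed, p_expected, kappa

ann_a = list("SNSSNSNNSS")
ann_b = list("SNSNNSNSSS")
p_o, p_e, kappa = cohen_kappa(ann_a, ann_b)
print(f"P_observed={p_o:.2f}  P_expected={p_e:.2f}  kappa={kappa:.2f}")
```

The printed values should match the three rows of the table: 0.80, 0.52, and 0.58.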

Kappa Interpretation Guide

| Kappa | Level | Action |
|---|---|---|
| < 0 | Worse than chance | Check for label swap! |
| 0.0–0.40 | Slight/Fair | Major guideline rewrite |
| 0.41–0.60 | Moderate | Refine guidelines |
| 0.61–0.80 | Substantial | Minor tweaks |
| 0.81–1.0 | Almost Perfect | Production ready! |

Target: κ ≥ 0.8 for production | If κ < 0.6: Fix guidelines before collecting more data!
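The guide can be turned into a small lookup for use in monitoring scripts. This is a sketch only: `interpret_kappa` is a hypothetical helper, and it assumes each band's upper bound is inclusive, so a value such as 0.405 falls into the next band up.

```python
def interpret_kappa(kappa):
    """Map a kappa value to the (level, action) pair from the interpretation guide."""
    if kappa < 0:
        return "Worse than chance", "Check for label swap"
    if kappa <= 0.40:
        return "Slight/Fair", "Major guideline rewrite"
    if kappa <= 0.60:
        return "Moderate", "Refine guidelines"
    if kappa <= 0.80:
        return "Substantial", "Minor tweaks"
    return "Almost Perfect", "Production ready"

for value in (-0.10, 0.58, 0.85):
    level, action = interpret_kappa(value)
    print(f"kappa={value:+.2f}: {level} -> {action}")
```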

Beyond Cohen's Kappa

| Scenario | Use This Metric |

|---|---|

| 2 annotators, categorical | Cohen's Kappa |

| 3+ annotators, categorical | Fleiss' Kappa |

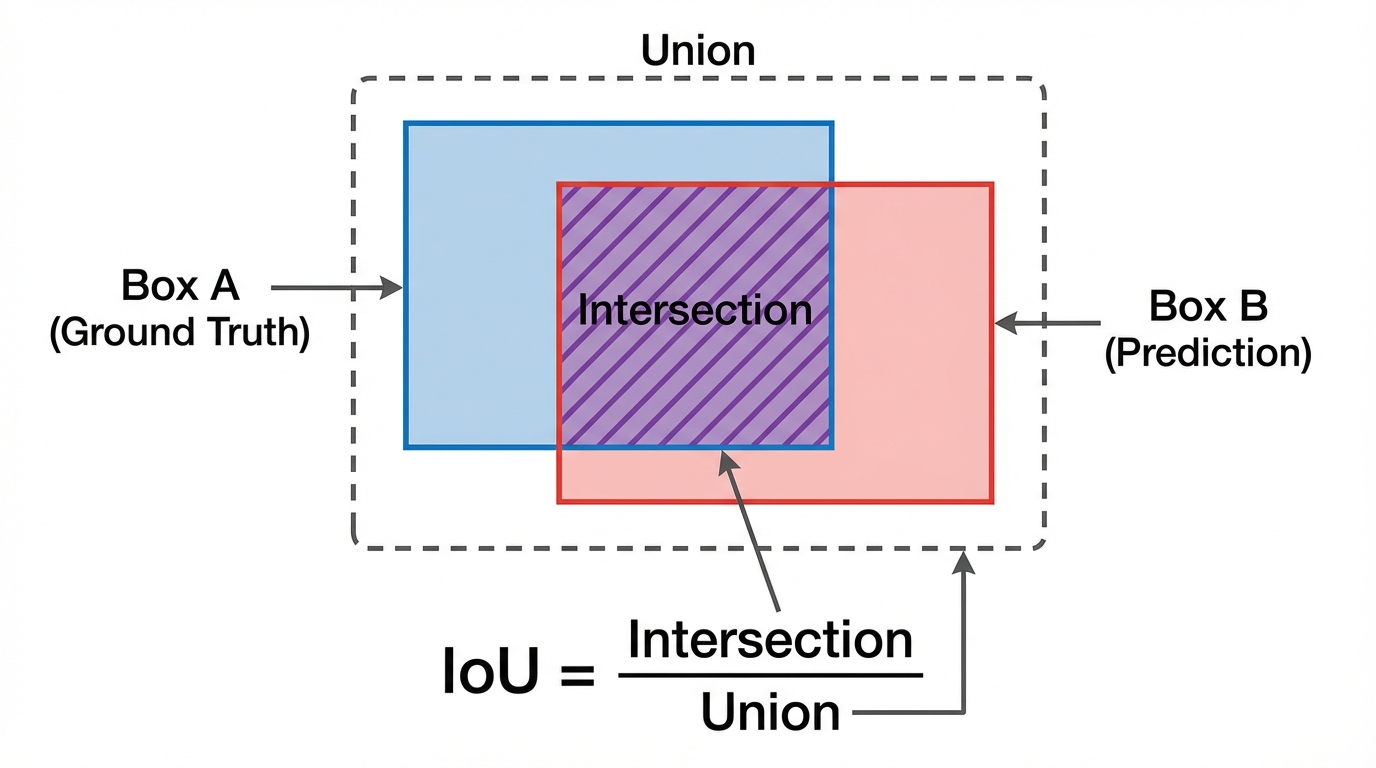

| Bounding boxes / masks | IoU (Intersection over Union) |

| Text spans (NER) | Span F1 |

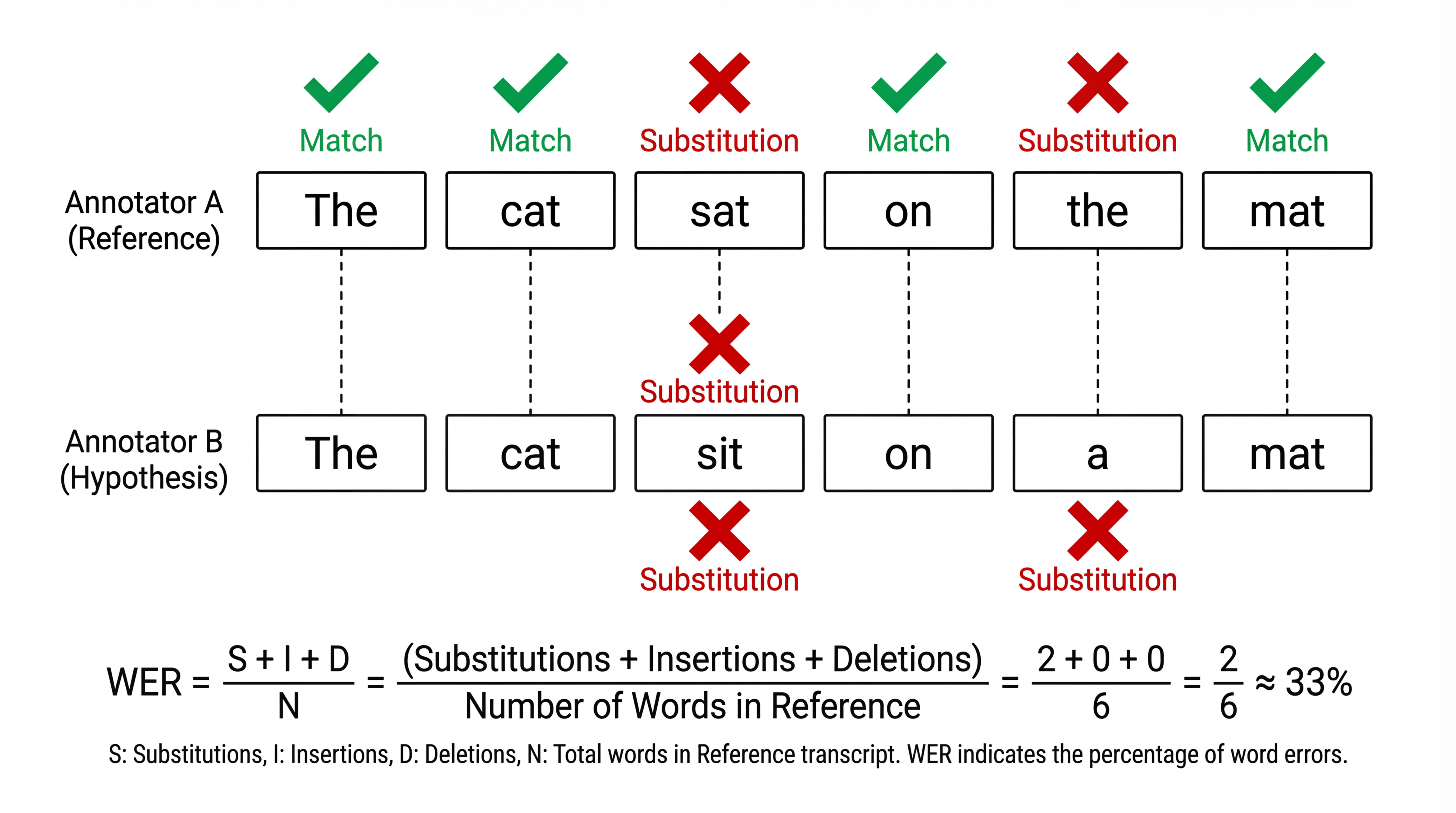

| Transcription | WER (Word Error Rate) |

Same question every time: how closely do annotators agree? (Only the kappa statistics explicitly correct for chance.)
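For three or more annotators, Fleiss' Kappa follows the same observed-versus-expected pattern as Cohen's Kappa. The sketch below is a hand-rolled version for illustration (statsmodels provides a `fleiss_kappa` function, listed under Resources); the ratings are made-up data, and the code assumes every item was labeled by the same number of raters.

```python
from collections import Counter

def fleiss_kappa(ratings):
    """Fleiss' kappa for a list of items, each a list of labels from the same number of raters."""
    n_items = len(ratings)
    n_raters = len(ratings[0])
    categories = sorted({label for item in ratings for label in item})
    # counts[i][cat] = how many raters assigned category cat to item i
    counts = [Counter(item) for item in ratings]

    # Per-item agreement: fraction of rater pairs that agree on that item
    per_item = [
        (sum(c[cat] ** 2 for cat in categories) - n_raters) / (n_raters * (n_raters - 1))
        for c in counts
    ]
    p_observed = sum(per_item) / n_items

    # Chance agreement from the overall share of each category
    shares = [sum(c[cat] for c in counts) / (n_items * n_raters) for cat in categories]
    p_expected = sum(s ** 2 for s in shares)
    return (p_observed - p_expected) / (1 - p_expected)

# Hypothetical labels: 4 emails, 3 annotators each (S = spam, N = not spam)
ratings = [
    ["S", "S", "S"],
    ["N", "N", "N"],
    ["S", "S", "N"],
    ["N", "N", "S"],
]
print(f"Fleiss' kappa: {fleiss_kappa(ratings):.2f}")
```

Here two items have full agreement and two have a 2-to-1 split, which gives P_observed = 2/3 against P_expected = 1/2, so kappa works out to 1/3.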

IoU for Spatial Annotations

IoU > 0.5: Generally considered a match | IoU > 0.7: Good agreement

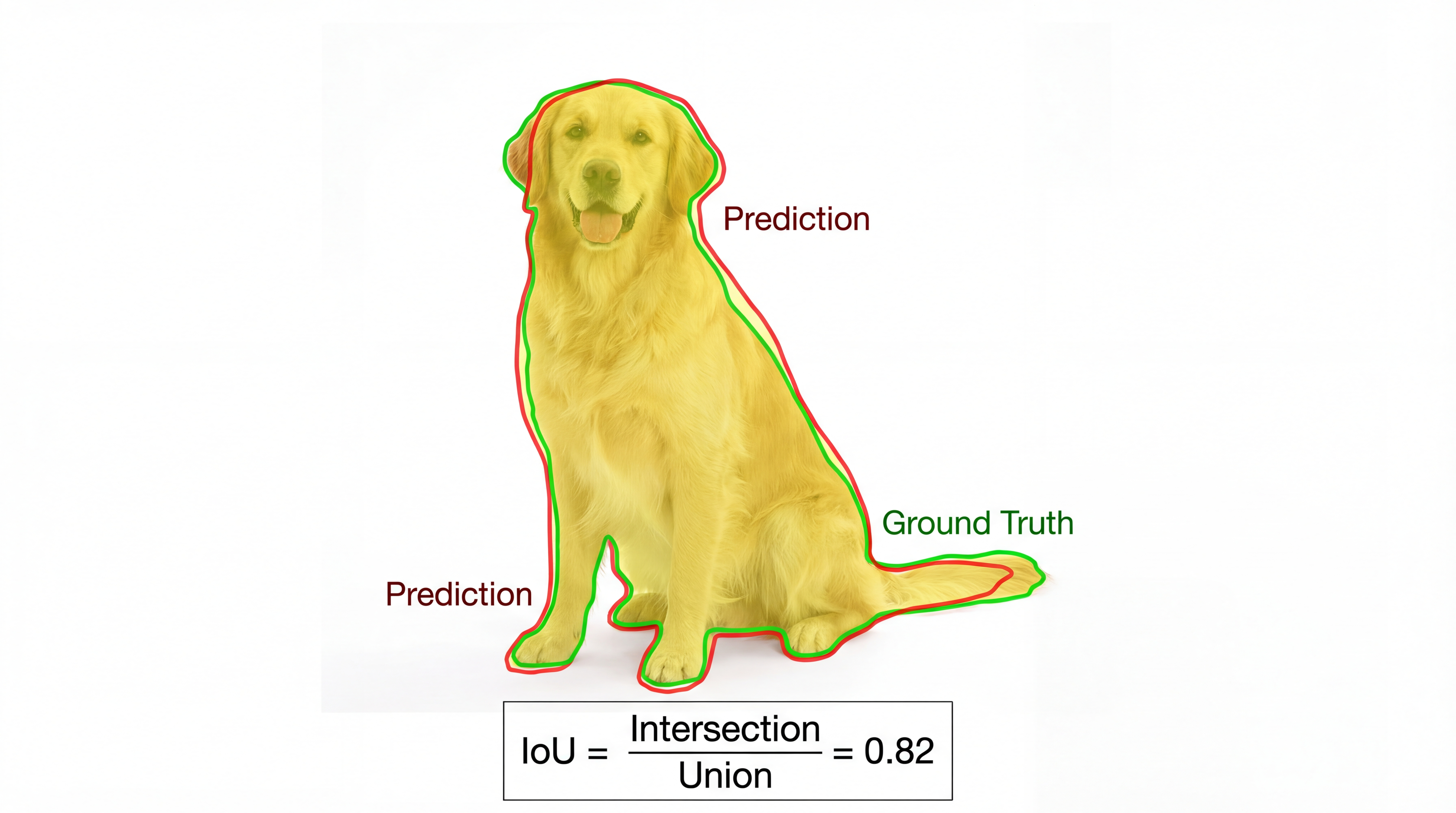

IoU for Segmentation Masks

    import numpy as np

    def segmentation_iou(mask1, mask2):
        intersection = np.logical_and(mask1, mask2).sum()
        union = np.logical_or(mask1, mask2).sum()
        # Two empty masks have no union; return 0 instead of dividing by zero
        return intersection / union if union > 0 else 0.0

Also common: Dice = 2×Intersection / (|A| + |B|)
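Dice and IoU are computed from the same counts and can be converted into each other (Dice = 2·IoU / (1 + IoU)). The sketch below uses plain nested lists instead of NumPy arrays so it runs without dependencies; `dice_and_iou` and the two tiny masks are illustrative only.

```python
def dice_and_iou(mask1, mask2):
    """Return (dice, iou) for two same-shaped 2D masks given as nested lists of 0/1."""
    a = [bool(v) for row in mask1 for v in row]
    b = [bool(v) for row in mask2 for v in row]
    intersection = sum(x and y for x, y in zip(a, b))
    size_a, size_b = sum(a), sum(b)
    union = size_a + size_b - intersection
    # Guard against empty masks to avoid dividing by zero
    dice = 2 * intersection / (size_a + size_b) if size_a + size_b > 0 else 0.0
    iou = intersection / union if union > 0 else 0.0
    return dice, iou

mask_a = [[1, 1, 0],
          [1, 1, 0]]
mask_b = [[0, 1, 1],
          [0, 1, 1]]
dice, iou = dice_and_iou(mask_a, mask_b)
print(f"Dice={dice:.2f}  IoU={iou:.2f}")
```

The masks share 2 of 6 covered pixels, so IoU is 1/3 while Dice is 1/2: Dice is never lower than IoU for the same pair of masks.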

Typical IAA by Task Type

| Task | Metric | Typical Good IAA |
|---|---|---|
| Text Classification | Cohen's Kappa | > 0.8 |
| Sentiment Analysis | Cohen's Kappa | > 0.7 |
| NER | Span F1 | > 0.85 |
| Object Detection | Mean IoU | > 0.7 |
| Segmentation | Mean IoU | > 0.8 |
| Transcription | WER | < 5% (between annotators) |
| Emotion Recognition | Cohen's Kappa | > 0.6 (subjective) |

Part 6: Quality Control

Brief overview — details in lab

Quality Control: 6 Pillars

| Pillar | Key Point |
|---|---|
| 1. GUIDELINES | Clear definitions + edge cases |
| 2. TRAINING | Calibration rounds until κ > 0.8 |
| 3. GOLD STANDARD | 10% known-correct items mixed in |
| 4. REDUNDANCY | 2-3 annotators per item |
| 5. MONITORING | Track IAA and accuracy over time |
| 6. ADJUDICATION | Majority vote or expert review |

Workflow: Pilot (50-100 items) → Measure IAA → Fix guidelines → Production
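Pillars 3 and 6 are straightforward to automate. The sketch below shows one possible approach: majority-vote adjudication that escalates items without a strict majority, and an accuracy check against gold-standard items. The helper names, item IDs, and labels are all hypothetical.

```python
from collections import Counter

def adjudicate(labels):
    """Return the majority label, or None when no strict majority exists (send to expert review)."""
    top_label, top_count = Counter(labels).most_common(1)[0]
    return top_label if top_count > len(labels) / 2 else None

def gold_accuracy(annotator_labels, gold_labels):
    """Share of gold-standard items that an annotator labeled correctly."""
    checked = [item for item in gold_labels if item in annotator_labels]
    if not checked:
        return None  # Annotator has not seen any gold items yet
    correct = sum(annotator_labels[item] == gold_labels[item] for item in checked)
    return correct / len(checked)

# Redundant labels per item (2-3 annotators each)
votes = {
    "email_1": ["S", "S", "N"],
    "email_2": ["N", "N", "N"],
    "email_3": ["S", "N"],       # tie: needs expert review
}
final = {item: adjudicate(labels) for item, labels in votes.items()}
print(final)

# Gold items are mixed into the annotator's normal queue
gold = {"email_1": "S", "email_2": "N"}
annotator = {"email_1": "S", "email_2": "S", "email_3": "N"}
print(gold_accuracy(annotator, gold))
```

Returning `None` for ties keeps ambiguous items out of the final dataset until an expert has looked at them.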

Part 7: Key Takeaways

Interview Questions

Common interview questions on data labeling:

- "How would you ensure label quality in a large annotation project?"
  - Use multiple annotators (redundancy)
  - Calculate inter-annotator agreement (Cohen's Kappa)
  - Include gold standard questions for monitoring
  - Write clear guidelines with edge cases
  - Run calibration sessions before production
- "What is Cohen's Kappa and why use it instead of percent agreement?"
  - Kappa accounts for chance agreement
  - Two random guessers on a balanced binary task agree about 50% of the time by chance
  - Kappa measures how much better than chance your agreement is
  - Target: Kappa >= 0.8 for production-ready labels
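The gap between percent agreement and kappa grows when classes are imbalanced. The sketch below illustrates this with made-up numbers: the same 85% observed agreement is scored very differently depending on how often each annotator says "spam". `chance_agreement` is an illustrative helper for the binary case only.

```python
def chance_agreement(p_spam_a, p_spam_b):
    """Probability that two independent annotators give the same binary label by chance."""
    return p_spam_a * p_spam_b + (1 - p_spam_a) * (1 - p_spam_b)

observed = 0.85  # hypothetical percent agreement
for spam_rate in (0.5, 0.1):
    p_e = chance_agreement(spam_rate, spam_rate)
    kappa = (observed - p_e) / (1 - p_e)
    print(f"spam rate {spam_rate:.0%}: chance agreement {p_e:.2f}, kappa {kappa:.2f}")
```

With balanced labels, chance agreement is 0.50; when both annotators mark only 10% of items as spam, it rises to 0.82, so 85% raw agreement is barely above chance.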

Key Takeaways

- Labels are the bottleneck - often cited as roughly 80% of AI project time
- Different tasks, different challenges - NER != Classification != Segmentation
- Start with Label Studio - Free, flexible, supports text, image, audio, and video
- Measure agreement - Cohen's Kappa >= 0.8 before production
- Invest in guidelines - Decision trees, examples, edge cases
- Quality control is ongoing - Gold questions, redundancy, monitoring
- Budget realistically - cost scales with complexity * redundancy * domain expertise

Part 8: Lab Preview

What you'll build today

This Week's Lab

Hands-on Practice:

- Install Label Studio - Set up local annotation server
- Create annotation project - Configure for sentiment analysis
- Write guidelines - Clear definitions and examples
- Label data - Annotate 30 movie reviews
- Calculate IAA - Measure Cohen's Kappa with a partner
- Discuss disagreements - Refine guidelines based on edge cases

Lab Setup Preview

    # Install Label Studio
    pip install label-studio

    # Start the server
    label-studio start

    # Access at http://localhost:8080

You'll create a sentiment analysis project and experience the full annotation workflow!

Next Week Preview

Week 4: Optimizing Labeling

- Active Learning - smart sampling to reduce labeling effort

- Weak Supervision - programmatic labeling with rules

- LLM-based labeling - using GPT/Claude as annotators

- Noisy label detection and handling

The techniques to make labeling 10x more efficient!

Resources

Tools:

- Label Studio: https://labelstud.io/

- CVAT: https://cvat.ai/

- Prodigy: https://prodi.gy/

Metrics:

- sklearn.metrics.cohen_kappa_score

- statsmodels fleiss_kappa

Reading:

- Annotation guidelines (Google, Amazon, Microsoft examples online)

Questions?

Thank You!

See you in the lab!

Appendix: Audio & Video Annotation

Reference slides — not covered in lecture

Audio: Transcription

Task: Convert speech to text with timestamps.

    {
      "audio": "interview.wav",
      "transcription": [
        {"start": 0.0, "end": 3.2, "speaker": "A", "text": "Hello, how are you?"},
        {"start": 3.5, "end": 5.8, "speaker": "B", "text": "I'm doing well, thank you."}
      ]
    }

Challenges: Speaker diarization, overlapping speech, accents, filler words

Audio: Sound Event Detection

Task: Identify and timestamp sound events.

    {
      "audio": "home_audio.wav",
      "events": [
        {"start": 2.3, "end": 3.1, "label": "door_slam"},
        {"start": 5.0, "end": 8.2, "label": "dog_bark"},
        {"start": 10.5, "end": 11.0, "label": "glass_break"}
      ]
    }

Applications: Surveillance, healthcare monitoring, environmental sounds
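Agreement on timestamped events can be measured with a one-dimensional version of IoU. This is a sketch of one possible approach rather than a standard from the lecture: `temporal_iou` is an illustrative helper, and the second annotator's timing is invented for the example.

```python
def temporal_iou(seg_a, seg_b):
    """IoU of two time intervals given as (start, end) in seconds."""
    overlap = min(seg_a[1], seg_b[1]) - max(seg_a[0], seg_b[0])
    if overlap <= 0:
        return 0.0  # Intervals do not overlap
    union = (seg_a[1] - seg_a[0]) + (seg_b[1] - seg_b[0]) - overlap
    return overlap / union

ann_a_event = (2.3, 3.1)  # door_slam as timed in the example above
ann_b_event = (2.5, 3.3)  # hypothetical second annotator, 0.2 s later
print(f"Temporal IoU: {temporal_iou(ann_a_event, ann_b_event):.2f}")
```

The two intervals overlap for 0.6 s out of a combined 1.0 s, giving an IoU of about 0.6.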

Video: Object Tracking

Task: Follow the same object across multiple frames. The track_id stays the same across all frames.

    {
      "video": "traffic.mp4",
      "tracks": [
        {
          "track_id": 1,
          "category": "car",
          "bboxes": [
            {"frame": 0, "bbox": [100, 200, 50, 80]},
            {"frame": 1, "bbox": [105, 202, 50, 80]},
            {"frame": 2, "bbox": [110, 204, 50, 80]}
          ]
        }
      ]
    }

Challenge: Re-identification after occlusion

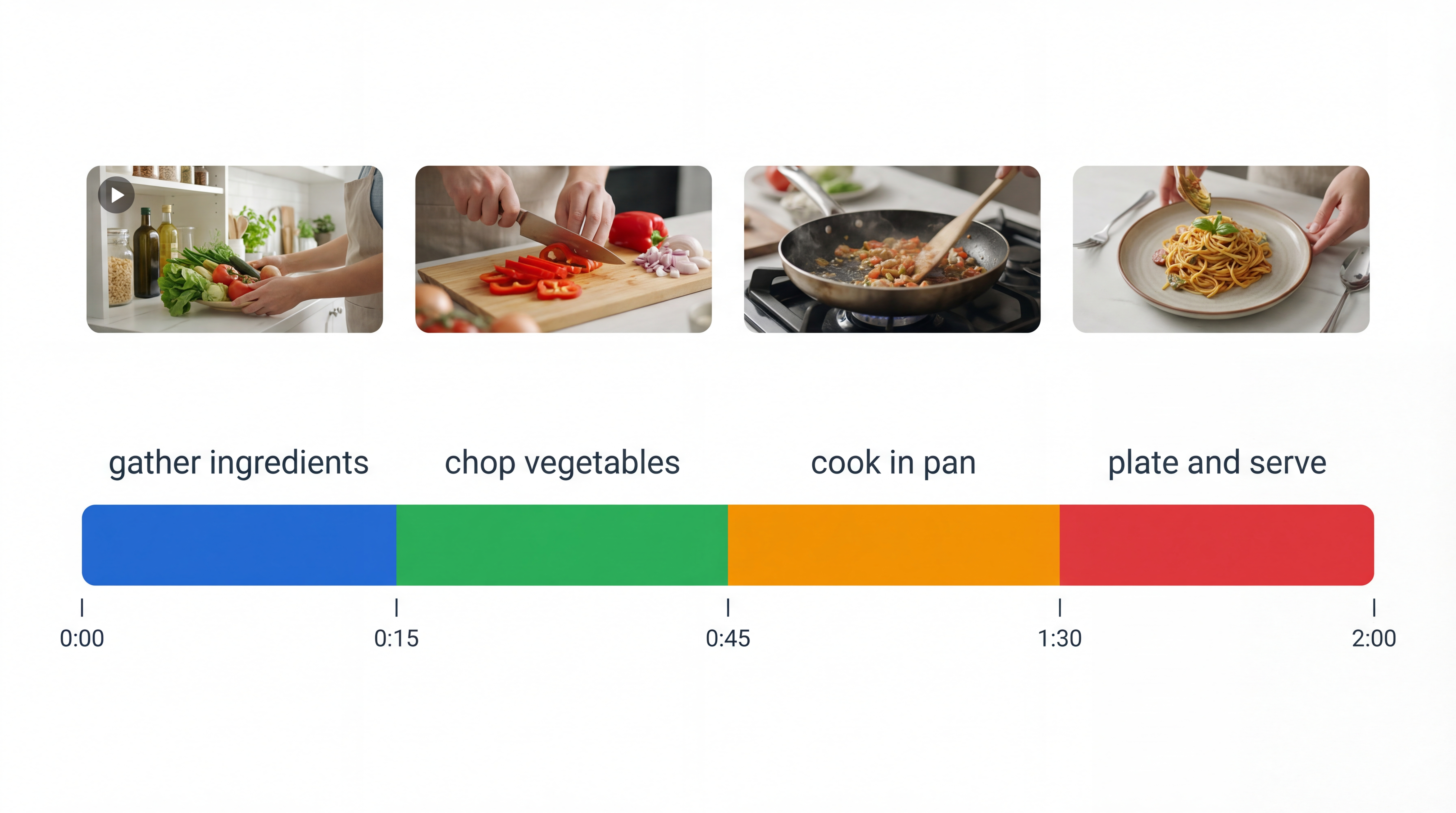
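The slides do not state the bbox format. Assuming the lists are `[x, y, width, height]` (an assumption, not confirmed by the source), they need converting to corner format before an IoU function that expects `[x1, y1, x2, y2]` can be applied. The sketch below also computes IoU between consecutive frames as a rough sanity check on a track, which is a suggestion rather than part of the lecture.

```python
def xywh_to_xyxy(bbox):
    """Convert [x, y, width, height] to [x1, y1, x2, y2] (assumed input format)."""
    x, y, w, h = bbox
    return [x, y, x + w, y + h]

def box_iou(box1, box2):
    """IoU of two boxes in [x1, y1, x2, y2] format."""
    x1, y1 = max(box1[0], box2[0]), max(box1[1], box2[1])
    x2, y2 = min(box1[2], box2[2]), min(box1[3], box2[3])
    if x2 <= x1 or y2 <= y1:
        return 0.0
    intersection = (x2 - x1) * (y2 - y1)
    area1 = (box1[2] - box1[0]) * (box1[3] - box1[1])
    area2 = (box2[2] - box2[0]) * (box2[3] - box2[1])
    return intersection / (area1 + area2 - intersection)

# Boxes for track_id 1 from the example above
track = [[100, 200, 50, 80], [105, 202, 50, 80], [110, 204, 50, 80]]
corners = [xywh_to_xyxy(b) for b in track]

# A sudden drop in frame-to-frame IoU can hint at an ID switch or a labeling slip
frame_ious = [box_iou(a, b) for a, b in zip(corners, corners[1:])]
print(corners[0])
print([f"{v:.2f}" for v in frame_ious])
```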

Video: Temporal Segmentation

Task: Divide video into meaningful segments.

| Segment | Start | End | Label |
|---|---|---|---|
| 1 | 0:00 | 0:15 | gather_ingredients |
| 2 | 0:15 | 0:45 | chop_vegetables |
| 3 | 0:45 | 1:30 | cook_in_pan |

Applications: Sports analysis, surgical videos, tutorials

Span F1 for NER

| Metric | Value |
|---|---|
| Exact Match | 2/3 = 0.67 (spans must match exactly) |
| Partial Match | 3/3 = 1.0 (overlapping spans count) |

Span F1 = Harmonic mean of Precision & Recall on entity spans
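Exact-match Span F1 between two annotators can be sketched as a set comparison. The spans below are hypothetical character offsets chosen to reproduce the 2/3 exact-match case; `span_f1` is an illustrative helper that treats annotator A as the reference (the F1 value is the same either way round).

```python
def span_f1(spans_a, spans_b):
    """Exact-match F1 between two annotators' (start, end, label) spans."""
    set_a, set_b = set(spans_a), set(spans_b)
    matched = len(set_a & set_b)  # spans with identical boundaries and label
    if matched == 0:
        return 0.0
    precision = matched / len(set_b)
    recall = matched / len(set_a)
    return 2 * precision * recall / (precision + recall)

# Hypothetical spans for one sentence
ann_a = [(0, 10, "PER"), (24, 30, "ORG"), (34, 42, "LOC")]
ann_b = [(0, 10, "PER"), (24, 30, "ORG"), (34, 38, "LOC")]  # third span is cut short
print(f"Span F1: {span_f1(ann_a, ann_b):.2f}")
```

Two of three spans match exactly, so precision, recall, and F1 are all 2/3. A partial-match variant would count the third pair as well because the spans overlap.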

WER for Transcription

    import jiwer

    # Arguments are (reference, hypothesis): 2 substitutions over 6 reference words
    wer = jiwer.wer("The cat sat on the mat", "The cat sit on a mat")
    print(f"WER: {wer:.1%}")  # 33.3%
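To see where the 33.3% comes from, WER can be computed directly as word-level edit distance divided by the number of reference words. The sketch below is a plain-Python illustration, not how jiwer is implemented internally; `word_error_rate` is a hypothetical name, and it assumes a non-empty reference.

```python
def word_error_rate(reference, hypothesis):
    """WER = word-level edit distance / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    # prev[j] = edit distance between the reference words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)

wer = word_error_rate("The cat sat on the mat", "The cat sit on a mat")
print(f"WER: {wer:.1%}")
```

The two sentences differ by two substitutions ("sat" to "sit", "the" to "a") across six reference words, hence 2/6.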

IoU: Python Implementation

    def calculate_iou(box1, box2):
        """Calculate IoU between two boxes [x1, y1, x2, y2]."""
        # Corners of the overlap rectangle
        x1, y1 = max(box1[0], box2[0]), max(box1[1], box2[1])
        x2, y2 = min(box1[2], box2[2]), min(box1[3], box2[3])
        if x2 <= x1 or y2 <= y1:
            return 0.0  # No overlap (also avoids dividing by zero for zero-area boxes)
        intersection = (x2 - x1) * (y2 - y1)
        area1 = (box1[2] - box1[0]) * (box1[3] - box1[1])
        area2 = (box2[2] - box2[0]) * (box2[3] - box2[1])
        return intersection / (area1 + area2 - intersection)
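The IoU > 0.5 match rule can be used to pair up boxes drawn by two annotators. The sketch below uses a simple greedy strategy, which is one possible choice and not necessarily what annotation tools do; `match_boxes` and the example boxes are hypothetical, and the IoU helper is repeated so the snippet runs on its own.

```python
def box_iou(box1, box2):
    """IoU of two boxes given as [x1, y1, x2, y2]."""
    x1, y1 = max(box1[0], box2[0]), max(box1[1], box2[1])
    x2, y2 = min(box1[2], box2[2]), min(box1[3], box2[3])
    if x2 <= x1 or y2 <= y1:
        return 0.0
    intersection = (x2 - x1) * (y2 - y1)
    area1 = (box1[2] - box1[0]) * (box1[3] - box1[1])
    area2 = (box2[2] - box2[0]) * (box2[3] - box2[1])
    return intersection / (area1 + area2 - intersection)

def match_boxes(boxes_a, boxes_b, threshold=0.5):
    """Greedily pair each box from annotator A with its best unmatched box from B."""
    matches, used = [], set()
    for i, box_a in enumerate(boxes_a):
        best_j, best_iou = None, threshold
        for j, box_b in enumerate(boxes_b):
            if j in used:
                continue
            iou = box_iou(box_a, box_b)
            if iou > best_iou:  # must exceed the threshold and any earlier candidate
                best_j, best_iou = j, iou
        if best_j is not None:
            used.add(best_j)
            matches.append((i, best_j, best_iou))
    return matches

boxes_a = [[0, 0, 10, 10], [20, 20, 30, 30]]
boxes_b = [[1, 1, 11, 11], [50, 50, 60, 60]]
matches = match_boxes(boxes_a, boxes_b)
for i, j, iou in matches:
    print(f"A[{i}] <-> B[{j}]  IoU={iou:.2f}")
```

Only the first pair overlaps enough to count as a match; the unmatched boxes on each side are the disagreements worth reviewing.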