Step 3d: Solving with 3 LFs

Add LF₃: rating < 4 → NEG. Now we have 3 pairwise agreements:

| Pair | Agreement | Equation |

|---|---|---|

| LF₁, LF₂ | 85% | α₁α₂ + (1-α₁)(1-α₂) = 0.85 |

| LF₁, LF₃ | 80% | α₁α₃ + (1-α₁)(1-α₃) = 0.80 |

| LF₂, LF₃ | 90% | α₂α₃ + (1-α₂)(1-α₃) = 0.90 |

3 equations, 3 unknowns → Can solve!
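One way to see why three equations are enough: substituting a_i = 2α_i - 1 turns each agreement equation into a_i · a_j = 2 · agreement_ij - 1, which can be solved directly. The sketch below assumes the LFs err independently and that every LF is better than chance (α > 0.5); `solve_three_lfs` and `agreement` are hypothetical helper names, not part of Snorkel.

```python
import math

def agreement(ai, aj):
    """Probability that two independent LFs with accuracies ai and aj agree."""
    return ai * aj + (1 - ai) * (1 - aj)

def solve_three_lfs(agree_12, agree_13, agree_23):
    """Recover (α1, α2, α3) from three pairwise agreement rates.

    Assumes independent errors and every α > 0.5.
    """
    # With a_i = 2α_i - 1, each equation becomes a_i * a_j = 2*agree_ij - 1
    c12, c13, c23 = (2 * g - 1 for g in (agree_12, agree_13, agree_23))
    a1 = math.sqrt(c12 * c13 / c23)
    a2 = math.sqrt(c12 * c23 / c13)
    a3 = math.sqrt(c13 * c23 / c12)
    return tuple((a + 1) / 2 for a in (a1, a2, a3))

# Round-trip check with made-up accuracies: build the agreement rates,
# then recover the accuracies from them
true = (0.8, 0.9, 0.85)
est = solve_three_lfs(agreement(true[0], true[1]),
                      agreement(true[0], true[2]),
                      agreement(true[1], true[2]))
print([round(a, 3) for a in est])  # [0.8, 0.9, 0.85]
```

The same function can be called on measured agreement rates; the closed form only exists for exactly three LFs, which is why larger systems are fit numerically.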

Generalizing: N Labeling Functions, K Examples

With N labeling functions:

| N (# LFs) | Pairwise Equations | Unknowns (α's) | Status |

|---|---|---|---|

| 2 | 1 | 2 | Underdetermined (can't solve) |

| 3 | 3 | 3 | ✓ Exactly determined |

| 4 | 6 | 4 | ✓ Overdetermined |

| 5 | 10 | 5 | ✓ Overdetermined |

| N | N(N-1)/2 | N | ✓ if N ≥ 3 |

Key insight: We need at least 3 LFs to solve for accuracies!
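The counts in the table can be reproduced by enumerating LF pairs. This is a small sketch; `count_pairwise_equations` is a hypothetical helper name.

```python
from itertools import combinations

def count_pairwise_equations(n_lfs):
    """Number of LF pairs, i.e. agreement equations, for n_lfs labeling functions."""
    return len(list(combinations(range(n_lfs), 2)))

for n in (2, 3, 4, 5):
    eqs = count_pairwise_equations(n)
    if eqs < n:
        status = "underdetermined"
    elif eqs == n:
        status = "exactly determined"
    else:
        status = "overdetermined"
    print(n, eqs, status)
```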

What Does "Overdetermined" Mean?

| Term | Equations vs Unknowns | Example | Reliability |

|---|---|---|---|

| Underdetermined | Fewer equations | 1 eq, 2 unknowns | Infinite solutions |

| Exactly determined | Equal | 3 eq, 3 unknowns | One solution (fragile) |

| Overdetermined | More equations | 6 eq, 4 unknowns | Most reliable! |

Why is overdetermined better?

- Real data has noise (agreement rates aren't perfectly measured)

- With exactly 3 equations: noise in any equation → wrong solution

- With 6+ equations: find best fit that satisfies all (least squares)

- Extra equations cross-check each other → robust to noise

Analogy: Asking 3 witnesses vs 10 witnesses — more witnesses = more reliable!
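A rough sketch of the "best fit" idea for 4 LFs (6 equations, 4 unknowns). With a_i = 2α_i - 1, each equation reads a_i · a_j = 2 · agreement_ij - 1, so taking logs makes the system linear and `numpy.linalg.lstsq` can fit it. This log-domain least squares is a simplification chosen for illustration, not the procedure Snorkel uses; it assumes independent errors, every α > 0.5, and every agreement rate above 0.5. `fit_accuracies` is a hypothetical helper name.

```python
from itertools import combinations

import numpy as np

def fit_accuracies(agree, n_lfs):
    """Least-squares fit of LF accuracies from pairwise agreement rates.

    agree maps a pair (i, j) with i < j to its observed agreement rate.
    """
    pairs = list(combinations(range(n_lfs), 2))
    A = np.zeros((len(pairs), n_lfs))
    b = np.zeros(len(pairs))
    for row, (i, j) in enumerate(pairs):
        A[row, i] = A[row, j] = 1.0               # log a_i + log a_j
        b[row] = np.log(2 * agree[(i, j)] - 1)    # = log(a_i * a_j)
    log_a, *_ = np.linalg.lstsq(A, b, rcond=None)
    return (np.exp(log_a) + 1) / 2                # back from a_i to α_i

# Made-up accuracies for 4 LFs, used to generate noise-free agreement rates
true = np.array([0.8, 0.9, 0.85, 0.75])
agree = {(i, j): true[i] * true[j] + (1 - true[i]) * (1 - true[j])
         for i, j in combinations(range(4), 2)}
est = fit_accuracies(agree, 4)
print(est.round(3))
```

With noisy agreement rates no exact solution exists, and the fit returns the accuracies that minimize the squared error across all six equations.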

Effect of Dataset Size (K Examples)

| K (# examples) | Agreement Estimate Quality | Solution Reliability |

|---|---|---|

| 100 | Noisy (±5-10%) | Rough estimates |

| 1,000 | Reasonable (±1-3%) | ✓ Good estimates |

| 10,000+ | Very accurate (±0.5%) | Highly reliable |

Both matter for reliability:

- More LFs (N) → overdetermined system → robust to equation noise

- More examples (K) → accurate agreement rates → less noise to begin with
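The error ranges above are roughly what the binomial standard error predicts. The sketch below assumes the K examples are independent and uses a normal-approximation 95% interval; `agreement_margin` is a hypothetical helper name.

```python
import math

def agreement_margin(p, k, z=1.96):
    """Approximate 95% margin of error for an agreement rate p measured on k examples."""
    return z * math.sqrt(p * (1 - p) / k)

for k in (100, 1_000, 10_000):
    print(k, f"±{100 * agreement_margin(0.85, k):.1f}%")
# 100 ±7.0%
# 1000 ±2.2%
# 10000 ±0.7%
```

The margin shrinks with the square root of K, so 100x more examples buys only 10x less noise.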

Step 3e: Solving with Gradient Descent (PyTorch)

```python
import torch

# Observed agreement rates for LF pairs (1,2), (1,3), (2,3)
obs = torch.tensor([0.85, 0.80, 0.90])

# Initialize α's as learnable parameters
α = torch.tensor([0.7, 0.7, 0.7], requires_grad=True)

for i in range(100):
    # Predicted agreement: αᵢαⱼ + (1-αᵢ)(1-αⱼ)
    # torch.stack (not torch.tensor) keeps pred attached to the autograd graph
    pred = torch.stack([
        α[0]*α[1] + (1-α[0])*(1-α[1]),  # LF1, LF2
        α[0]*α[2] + (1-α[0])*(1-α[2]),  # LF1, LF3
        α[1]*α[2] + (1-α[1])*(1-α[2]),  # LF2, LF3
    ])
    loss = ((pred - obs)**2).sum()
    loss.backward()
    with torch.no_grad():
        α -= 0.1 * α.grad     # gradient step
        α.clamp_(0.5, 1.0)    # keep in valid range
    α.grad.zero_()
```

Iter 10: α=[0.798, 0.899, 0.849], loss=0.0001

Iter 50: α=[0.800, 0.900, 0.850], loss=0.0000 ✓

lecture-demos/week04/solve_lf_accuracy_torch.ipynb

Step 4: Convert Accuracy → Voting Weight

Weight formula (log-odds of accuracy, natural log): $w = \log\frac{\alpha}{1-\alpha}$

| LF | Accuracy (α) | Calculation | Weight (w) |

|---|---|---|---|

| LF₁ | 0.80 | log(0.8/0.2) = log(4) | 1.39 |

| LF₂ | 0.90 | log(0.9/0.1) = log(9) | 2.20 |

Intuition: Higher accuracy → higher weight → more influence in vote
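The table values can be checked in a couple of lines. `accuracy_to_weight` is a hypothetical helper name; it uses the natural log, which is what reproduces 1.39 and 2.20.

```python
import math

def accuracy_to_weight(alpha):
    """Log-odds of an LF's accuracy (natural log); requires 0 < alpha < 1."""
    return math.log(alpha / (1 - alpha))

print(round(accuracy_to_weight(0.80), 2))  # 1.39
print(round(accuracy_to_weight(0.90), 2))  # 2.2
print(accuracy_to_weight(0.5))             # 0.0
```

An LF that is right only half the time gets weight 0, so a coin-flip heuristic has no influence on the vote.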

Step 5: Compute Final Probabilities

Using learned weights: w₁ = 1.39 (α₁=0.80), w₂ = 2.20 (α₂=0.90)

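Formula: $P(\text{POS}) = \dfrac{e^{\text{Score}_{\text{POS}}}}{e^{\text{Score}_{\text{POS}}} + e^{\text{Score}_{\text{NEG}}}}$, where each score is the sum of the weights of the LFs that voted for that class (abstaining LFs add nothing).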

| # | Review Text | LF₁ Vote | LF₂ Vote | Score POS | Score NEG |

|---|---|---|---|---|---|

| 1 | "good movie" (8.0) | ✓ POS | ✓ POS | 1.39+2.20=3.59 | 0 |

| 2 | "good but boring" (5.0) | ✓ POS | — | 1.39 | 0 |

| 3 | "terrible" (2.0) | — | ✓ NEG | 0 | 2.20 |

| 4 | "decent film" (7.5) | — | ✓ POS | 2.20 | 0 |

Step 5: Full Calculation Table

| # | Score POS | Score NEG | e^(POS) | e^(NEG) | Sum | P(POS) |

|---|---|---|---|---|---|---|

| 1 | 3.59 | 0 | 36.2 | 1.0 | 37.2 | 97.3% |

| 2 | 1.39 | 0 | 4.0 | 1.0 | 5.0 | 80.0% |

| 3 | 0 | 2.20 | 1.0 | 9.0 | 10.0 | 10.0% |

| 4 | 2.20 | 0 | 9.0 | 1.0 | 10.0 | 90.0% |

Interpretation:

- Review 1: Both LFs agree → very confident (97%)

- Review 2: Only weaker LF₁ votes → moderately confident (80%)

- Review 3: Strong LF₂ says NEG → confident negative (10% POS)

- Review 4: Only strong LF₂ votes → confident (90%)
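The full calculation table can be reproduced with a short sketch. The vote encoding (`"POS"`, `"NEG"`, `None` for abstain) and the name `p_positive` are illustrative choices, and the weights are computed from α₁ = 0.80 and α₂ = 0.90 rather than typed in rounded.

```python
import math

ACCURACIES = {"LF1": 0.80, "LF2": 0.90}
WEIGHTS = {lf: math.log(a / (1 - a)) for lf, a in ACCURACIES.items()}

def p_positive(votes, weights=WEIGHTS):
    """votes maps LF name -> "POS", "NEG", or None (abstain)."""
    score_pos = sum(weights[lf] for lf, v in votes.items() if v == "POS")
    score_neg = sum(weights[lf] for lf, v in votes.items() if v == "NEG")
    return math.exp(score_pos) / (math.exp(score_pos) + math.exp(score_neg))

reviews = {
    1: {"LF1": "POS", "LF2": "POS"},
    2: {"LF1": "POS", "LF2": None},
    3: {"LF1": None, "LF2": "NEG"},
    4: {"LF1": None, "LF2": "POS"},
}
for idx, votes in reviews.items():
    print(idx, f"{100 * p_positive(votes):.1f}%")
# 1 97.3%
# 2 80.0%
# 3 10.0%
# 4 90.0%
```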

Step 5: Conflicting Votes Example

What if LFs disagree? The more accurate LF wins!

| # | Review | LF₁ (w=1.39) | LF₂ (w=2.20) | Score POS | Score NEG | P(POS) |

|---|---|---|---|---|---|---|

| 5 | "good acting, bad plot" | ✓ POS | ✓ NEG | 1.39 | 2.20 | 31% |

| 6 | "poor quality, great story" | ✓ NEG | ✓ POS | 2.20 | 1.39 | 69% |

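Calculation for Review 5: $P(\text{POS}) = \dfrac{e^{1.39}}{e^{1.39} + e^{2.20}} \approx \dfrac{4.0}{4.0 + 9.0} \approx 0.31$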

→ LF₂ has higher accuracy (α₂=0.90 > α₁=0.80), so its vote dominates!
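With two classes, the softmax over the two scores equals a sigmoid of their difference, so only the gap between the weights matters. A minimal sketch, with `p_positive_from_scores` as a hypothetical helper name:

```python
import math

def p_positive_from_scores(score_pos, score_neg):
    """Two-class softmax written as a sigmoid of the score difference."""
    return 1 / (1 + math.exp(-(score_pos - score_neg)))

print(round(p_positive_from_scores(1.39, 2.20), 2))  # 0.31  (Review 5)
print(round(p_positive_from_scores(2.20, 1.39), 2))  # 0.69  (Review 6)
print(p_positive_from_scores(1.39, 1.39))            # 0.5   (equal weights tie)
```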

Worked Example: Step 5 — Train Final Model

```python
from sklearn.linear_model import LogisticRegression

# Get probabilistic labels from Snorkel: one row of class probabilities per example
probs = label_model.predict_proba(L_train)  # P(POS) column e.g. 0.92, 0.35, 0.87, ...

# Train any classifier; here the soft labels are thresholded at 0.5 into hard 0/1 labels
model = LogisticRegression()
model.fit(X_features, (probs[:, 1] > 0.5).astype(int))
```
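Thresholding at 0.5 discards how confident each label is. One common alternative, sketched here as an assumption rather than as what the lecture demo does, is to duplicate every example once per class and pass the probabilities as sample weights. `expand_soft_labels` is a hypothetical helper, and the toy arrays are made up.

```python
import numpy as np

def expand_soft_labels(X, p_pos):
    """Duplicate each example as (x, 1) weighted by p and (x, 0) weighted by 1 - p."""
    X = np.asarray(X)
    p_pos = np.asarray(p_pos, dtype=float)
    X_rep = np.concatenate([X, X])
    y_rep = np.concatenate([np.ones(len(X), dtype=int), np.zeros(len(X), dtype=int)])
    w_rep = np.concatenate([p_pos, 1 - p_pos])
    return X_rep, y_rep, w_rep

X_toy = np.array([[0.1], [0.9], [0.5]])   # made-up features
p_toy = np.array([0.92, 0.35, 0.87])      # made-up P(POS) values
X_rep, y_rep, w_rep = expand_soft_labels(X_toy, p_toy)
print(X_rep.shape, y_rep.tolist(), w_rep.round(2).tolist())
```

The result can then be passed to scikit-learn as `LogisticRegression().fit(X_rep, y_rep, sample_weight=w_rep)`, so an example labeled 0.92 POS pulls the model harder than one labeled 0.55 POS.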

Full pipeline summary:

- Write 10-20 labeling functions (heuristics)

- Apply LFs → get noisy vote matrix

- Train Label Model → learn LF reliabilities

- Get probabilistic labels → train your real model

demos/snorkel_weak_supervision.py

When to Use Weak Supervision

Good candidates:

- Patterns can be encoded as rules

- You have domain knowledge

- Labels have clear heuristics

- Data is too large for manual labeling

Bad candidates:

- Task requires human judgment (e.g., humor detection)

- No clear patterns or heuristics

- Very small dataset (just label it manually)

- High precision required (weak labels are noisy)

Part 4: LLM-Based Labeling

AI labeling your data

The LLM Labeling Revolution

2022-2024: Large Language Models became viable annotators.

```python
# Before: hire annotators
cost_per_label = 0.30   # USD
human_labels = 10_000
total_cost = cost_per_label * human_labels   # 3000 USD

# Now: use GPT-4 / Claude
cost_per_label = 0.002  # USD (roughly)
llm_labels = 10_000
total_cost = cost_per_label * llm_labels     # 20 USD

# 150x cost reduction!
```

But: Are LLM labels as good as human labels?

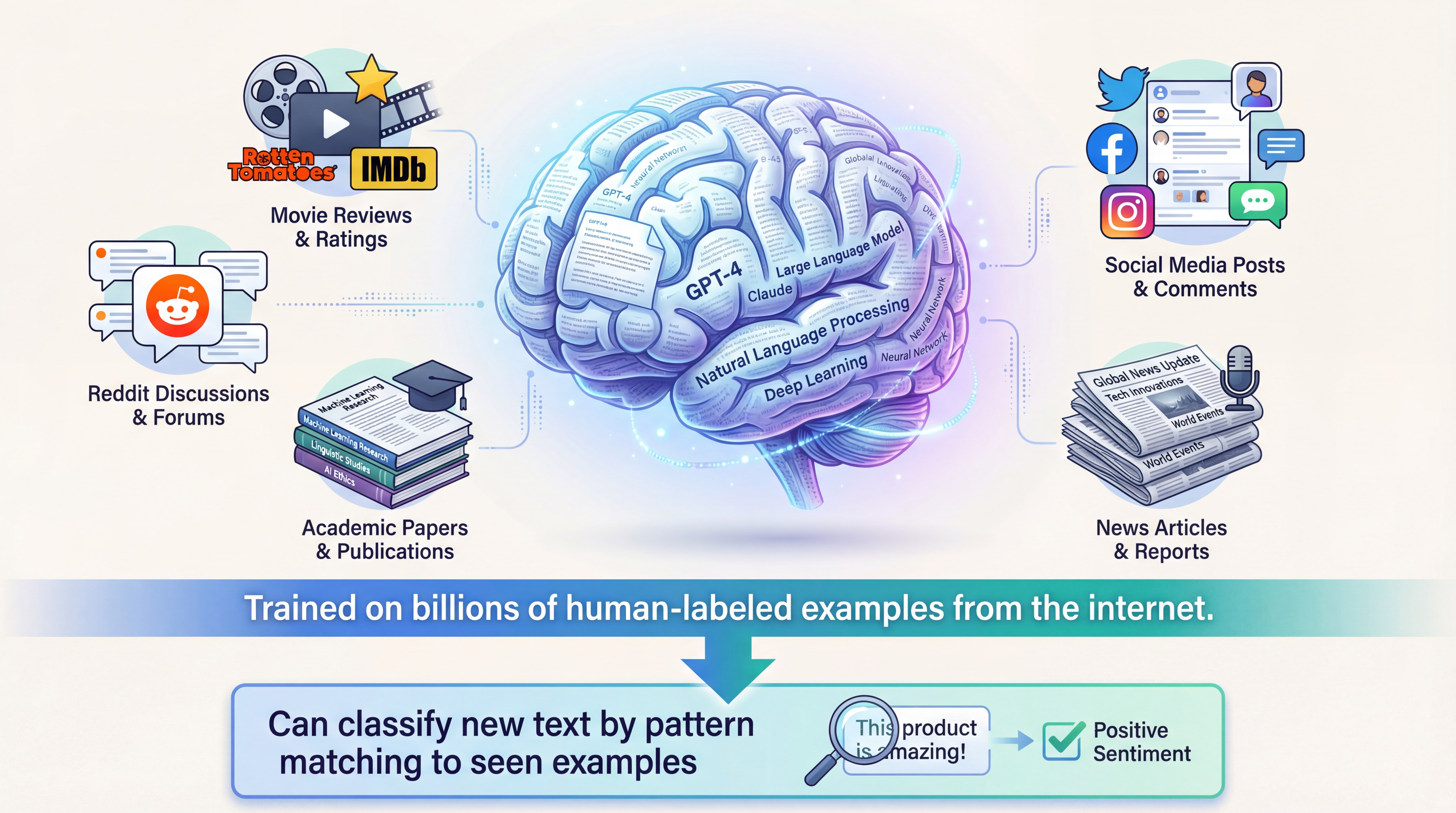

Why LLMs Can Label Data

LLMs = Crowdsourced human knowledge, distilled into a model

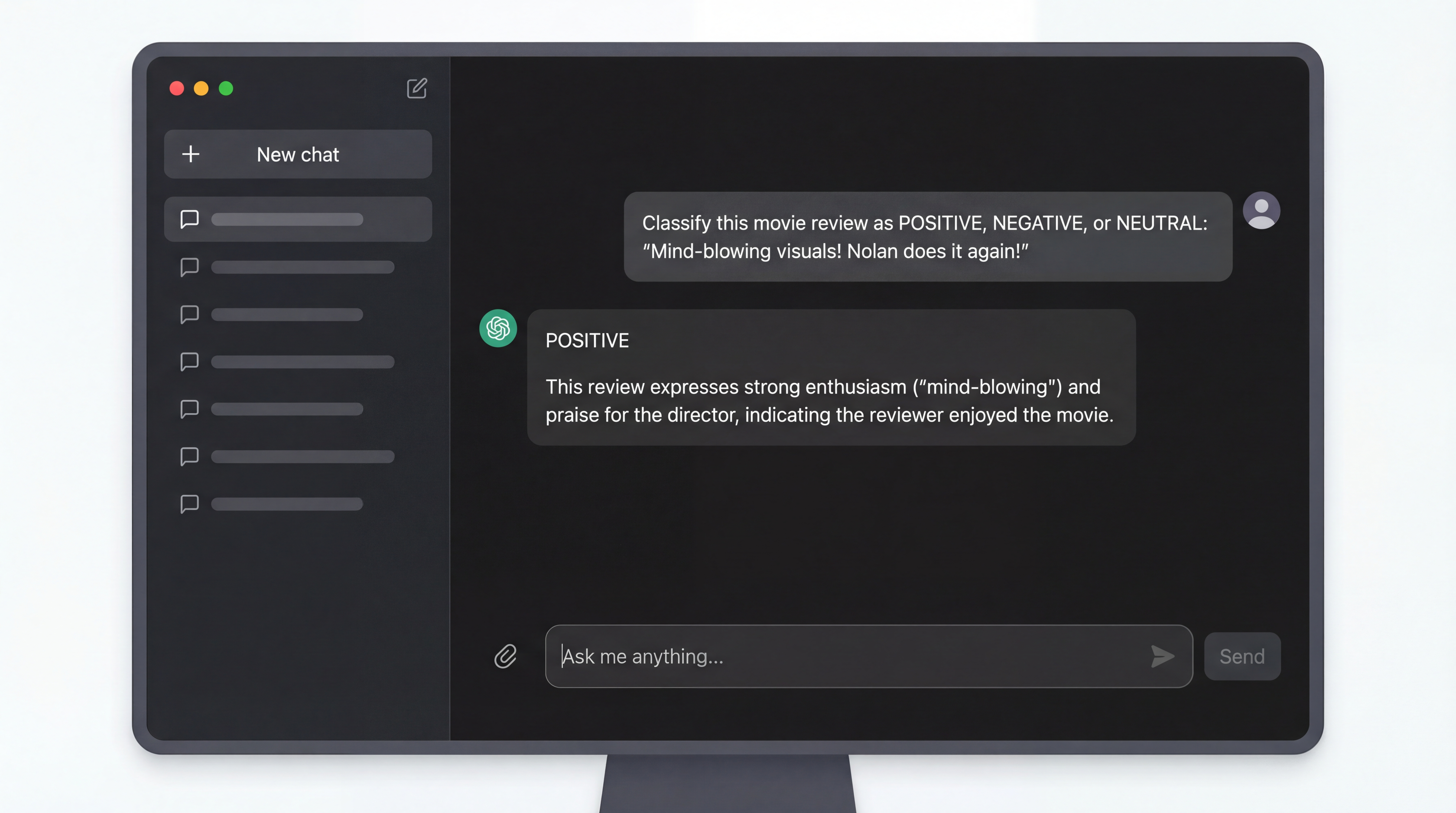

LLM Labeling: ChatGPT Interface

It's this simple! Just ask the LLM to classify text. But how do we scale this to 10,000 reviews?

LLM Labeling: System vs User Messages

| Role | Purpose | Example |

|---|---|---|

| System | Define the task, persona, output format | "You are a movie critic. Classify as POSITIVE/NEGATIVE/NEUTRAL" |

| User | The actual text to classify | "Review: 'Mind-blowing visuals!'" |

```python
messages = [
    {"role": "system", "content": "Classify movie reviews as POSITIVE/NEGATIVE/NEUTRAL"},
    {"role": "user", "content": "Review: 'Mind-blowing visuals! Nolan does it again!'"},
]
# Response: "POSITIVE"
```

LLM Labeling: API for Scale

```python
from openai import OpenAI

client = OpenAI()  # reads the API key from the OPENAI_API_KEY environment variable

def label_review(review):
    response = client.chat.completions.create(
        model="gpt-4",
        messages=[
            {"role": "system", "content": "Classify as POSITIVE/NEGATIVE/NEUTRAL. Reply with only the label."},
            {"role": "user", "content": f"Review: {review}"},
        ],
        max_tokens=10,
    )
    return response.choices[0].message.content.strip()

# Label 1000 reviews (`reviews` is a list of review strings)
labels = [label_review(r) for r in reviews]
```
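Even with "Reply with only the label", replies sometimes come back with extra punctuation, different casing, or a short preamble. A rough sketch of a cleanup step follows; `normalize_label` and `ALLOWED_LABELS` are hypothetical names, and the substring fallback is deliberately naive (a reply such as "not positive" would still map to POSITIVE).

```python
ALLOWED_LABELS = ("POSITIVE", "NEGATIVE", "NEUTRAL")

def normalize_label(raw):
    """Map a raw model reply to one allowed label, or None if it is unusable."""
    cleaned = raw.strip().strip(".!\"'").upper()
    if cleaned in ALLOWED_LABELS:
        return cleaned
    # Accept replies like "Label: negative" only if exactly one label is mentioned
    mentioned = [lab for lab in ALLOWED_LABELS if lab in cleaned]
    return mentioned[0] if len(mentioned) == 1 else None

print(normalize_label(" positive.\n"))     # POSITIVE
print(normalize_label("Label: NEGATIVE"))  # NEGATIVE
print(normalize_label("Could be either"))  # None
```

Replies that normalize to `None` can be retried or sent to a human instead of silently entering the dataset.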

lecture-demos/week04/llm_labeling.py

Better Prompts = Better Labels

| Technique | Example | Benefit |

|---|---|---|

| Clear labels | "POSITIVE/NEGATIVE/NEUTRAL" | No ambiguity |

| Definitions | "POSITIVE: Reviewer enjoyed the movie" | Consistent criteria |

| Few-shot | Give 2-3 examples first | Much higher accuracy |

| JSON output | "Respond in JSON: {label, confidence}" | Easy parsing |

```python
prompt = """Examples:
"Loved it!" → POSITIVE
"Terrible" → NEGATIVE

Now classify: "The movie was okay I guess"
Label:"""
```

Few-shot examples can improve accuracy by 10-20%!
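To apply the same few-shot template to many reviews, the prompt can be assembled from a list of examples. A minimal sketch; `build_few_shot_prompt` is a hypothetical helper name.

```python
def build_few_shot_prompt(examples, text):
    """examples is a list of (review, label) pairs shown before the new review."""
    lines = ["Examples:"]
    lines += [f'"{review}" → {label}' for review, label in examples]
    lines += ["", f'Now classify: "{text}"', "Label:"]
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    [("Loved it!", "POSITIVE"), ("Terrible", "NEGATIVE")],
    "The movie was okay I guess",
)
print(prompt)
```

Keeping the examples in a list makes it easy to swap in examples from your own domain and compare accuracy across prompt variants.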

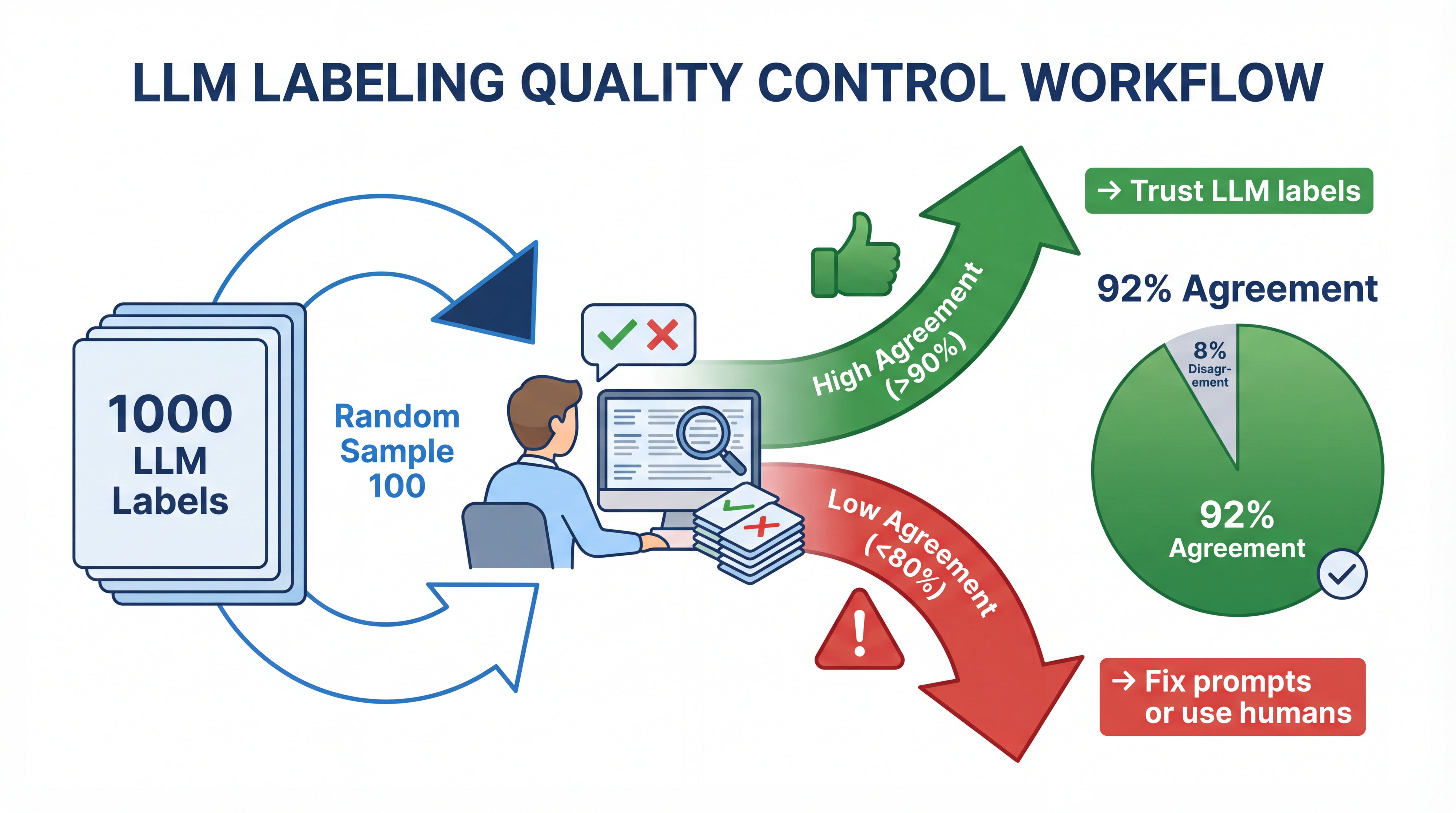

LLM Labeling Quality Control

Always validate! Sample 50-100 labels and check human-LLM agreement before trusting the full batch.
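A sketch of the spot-check: compare LLM labels with human labels on the sample and report raw agreement plus Cohen's kappa, which corrects for agreement expected by chance. The label lists are made up for illustration, and the function names are hypothetical.

```python
from collections import Counter

def agreement_rate(human, llm):
    """Fraction of items on which the two label lists match."""
    return sum(h == l for h, l in zip(human, llm)) / len(human)

def cohens_kappa(human, llm):
    """Chance-corrected agreement; undefined if chance agreement is exactly 1."""
    n = len(human)
    p_observed = agreement_rate(human, llm)
    h_counts, l_counts = Counter(human), Counter(llm)
    p_chance = sum(h_counts[c] * l_counts[c] for c in h_counts) / (n * n)
    return (p_observed - p_chance) / (1 - p_chance)

# Made-up sample of 10 spot-checked reviews
human = ["POS", "POS", "NEG", "NEG", "POS", "NEG", "POS", "NEG", "POS", "NEG"]
llm   = ["POS", "NEG", "NEG", "POS", "POS", "NEG", "POS", "NEG", "POS", "NEG"]
print(agreement_rate(human, llm))          # 0.8
print(round(cohens_kappa(human, llm), 2))  # 0.6
```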

When LLMs Struggle

1. Subjective Tasks

"This movie is so bad it's good"

LLM: NEGATIVE (wrong - it's ironic praise!)

2. Domain-Specific Knowledge

"The mise-en-scene was pedestrian but the diegetic sound..."

LLM: ? (needs film theory knowledge)

3. Nuanced Categories

5-point scale: Very Negative, Negative, Neutral, Positive, Very Positive

LLM accuracy drops significantly with more categories

4. Ambiguous Guidelines

What exactly counts as "slightly negative"?

Hybrid Approach: LLM + Human

```python
def hybrid_labeling(texts, confidence_threshold=0.8):
    """Split texts into LLM-labeled items and a queue for human review.

    label_with_confidence(text) is assumed to be defined elsewhere and to
    return a (label, confidence) pair.
    """
    llm_labels = []
    human_queue = []

    for i, text in enumerate(texts):
        label, confidence = label_with_confidence(text)
        if confidence >= confidence_threshold:
            llm_labels.append((i, label, "llm"))
        else:
            human_queue.append(i)

    print(f"LLM labeled: {len(llm_labels)}")
    print(f"Need human: {len(human_queue)}")
    # Send human_queue to annotation platform
    return llm_labels, human_queue
```

Use LLMs for easy examples, humans for hard ones!
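One possible way to obtain a (label, confidence) pair is to ask the model for JSON such as `{"label": "POSITIVE", "confidence": 0.93}` and parse it defensively. This is a sketch under that assumption; `parse_label_confidence` is a hypothetical helper, and a model's self-reported confidence is not guaranteed to be calibrated, so the threshold should be checked against human labels.

```python
import json

def parse_label_confidence(raw, allowed=("POSITIVE", "NEGATIVE", "NEUTRAL")):
    """Parse a JSON reply into (label, confidence).

    Malformed or out-of-range replies return (None, 0.0), so any confidence
    threshold routes them to human review.
    """
    try:
        data = json.loads(raw)
        label = str(data["label"]).upper()
        confidence = float(data["confidence"])
    except (ValueError, KeyError, TypeError):
        return None, 0.0
    if label not in allowed or not 0.0 <= confidence <= 1.0:
        return None, 0.0
    return label, confidence

print(parse_label_confidence('{"label": "positive", "confidence": 0.93}'))  # ('POSITIVE', 0.93)
print(parse_label_confidence('I think it is positive'))                     # (None, 0.0)
print(parse_label_confidence('{"label": "MIXED", "confidence": 0.9}'))      # (None, 0.0)
```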

LLM Labeling: Cost Comparison

| Method | Cost/1000 labels | INR/1000 | Quality | Speed |

|---|---|---|---|---|

| Expert humans | $300-500 | ₹25,000-42,000 | Highest | Slow |

| Crowdsourcing | $50-100 | ₹4,200-8,400 | Medium | Medium |

| GPT-4 | $20-50 | ₹1,700-4,200 | Good | Fast |

| GPT-3.5 | $2-5 | ₹170-420 | Moderate | Very Fast |

| Claude Haiku | $1-3 | ₹85-250 | Moderate | Very Fast |

| Open source LLM | ~$0 | ~₹0 (compute) | Varies | Depends |

Sweet spot: GPT-3.5/Claude Haiku for first pass, humans for validation

Part 5: Handling Noisy Labels

Garbage in, garbage out?

Sources of Label Noise

| Source | Example | How common |

|---|---|---|

| Annotator error | Tired annotator clicks wrong button | 5-15% |

| Task ambiguity | "The movie was okay" - POS or NEG? | 10-20% |

| Weak supervision | Heuristic "good" → POS catches "not good" | 15-30% |

| Data entry errors | Columns swapped, typos | 1-5% |

Real-world datasets often have 5-20% label noise!

Detecting Label Errors with Cleanlab

Idea: Train model → find where model strongly disagrees with label

| Review | Given Label | Model says | Suspicious? |

|---|---|---|---|

| "Loved it!" | POS | 95% POS | No |

| "Not good at all" | POS | 92% NEG | Yes! |

| "Meh, it was fine" | NEG | 60% NEG | No |
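The idea in the table can be sketched as a simple threshold rule: flag an example when the model gives its assigned label a very low probability. This is a simplification for intuition, not cleanlab's confident-learning algorithm; the 0.2 threshold and the name `flag_suspicious` are assumptions.

```python
def flag_suspicious(prob_of_given_label, threshold=0.2):
    """Return indices where the model's probability for the given label is below threshold."""
    return [i for i, p in enumerate(prob_of_given_label) if p < threshold]

reviews = ["Loved it!", "Not good at all", "Meh, it was fine"]
given = ["POS", "POS", "NEG"]
# Model's probability for the GIVEN label of each row in the table:
# 95% POS, then 92% NEG (so 8% for the given POS), then 60% NEG
p_given = [0.95, 0.08, 0.60]

for i in flag_suspicious(p_given):
    print(f"Check label {given[i]} on: {reviews[i]!r}")
```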

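```python
from cleanlab import Datalab
from sklearn.model_selection import cross_val_predict

# Out-of-sample predicted probabilities; in-sample ones tend to hide label errors
pred_probs = cross_val_predict(model, X, y, cv=5, method="predict_proba")

lab = Datalab(data={"X": X, "y": y}, label_name="y")
lab.find_issues(pred_probs=pred_probs)

issues = lab.get_issues()
mislabeled = issues[issues["is_label_issue"]].index  # row indices of suspected label errors
```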

lecture-demos/week04/cleanlab_noisy_labels.ipynb

What to Do With Noisy Labels?

| Strategy | When to use | Code |

|---|---|---|

| Remove | Few errors, enough data | X_clean = np.delete(X, mislabeled, axis=0) |

| Re-label | Important examples | Send back to humans |

| Label smoothing | Many errors | y = [0.9, 0.05, 0.05] instead of [1,0,0] |
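The label-smoothing row can be sketched as follows, matching the [0.9, 0.05, 0.05] example: keep 1 - ε on the given class and split ε evenly over the others. `smooth_one_hot` is a hypothetical helper; note that some libraries use a variant that spreads ε over all classes, including the given one.

```python
def smooth_one_hot(true_class, n_classes, eps=0.1):
    """Put 1 - eps on the true class and split eps evenly over the other classes."""
    other = eps / (n_classes - 1)
    return [1 - eps if c == true_class else other for c in range(n_classes)]

print(smooth_one_hot(0, 3))  # [0.9, 0.05, 0.05]
```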

Rule of thumb:

- <5% noise → probably fine, ignore it

- 5-15% noise → use cleanlab to remove/fix

- >15% noise → fix your labeling process!

Part 6: Combining Approaches

The best of all worlds

Decision Tree: Which Technique?

| Data Size | First Choice | Add if... |

|---|---|---|

| <1,000 | Manual labeling | — |

| 1k-10k | Active Learning | + Weak supervision if patterns exist |

| >10k | Weak Supervision or LLM | + Active Learning for hard cases |

Quick decision guide:

| Question | Yes → | No → |

|---|---|---|

| Can you write labeling heuristics? | Weak Supervision | LLM Labeling |

| Do you have budget for LLM API? | LLM Labeling | Weak Supervision |

| Is high precision critical? | Active Learning + humans | LLM or Weak Supervision |

Cost-Benefit Analysis

| Approach | Setup Cost | Per-Label Cost | Quality |

|---|---|---|---|

| Manual only | Low | $0.30 | High |

| + Active Learning | Medium | $0.30 (fewer) | High |

| + Weak Supervision | High | ~$0 | Medium |

| + LLM Labeling | Low | $0.002 | Medium-High |

| + Noise Cleaning | Medium | ~$0 | Improved |

Typical savings: 5-20x cost reduction with hybrid approach

Part 7: Key Takeaways

Key Takeaways

- Active Learning: let the model pick what to label (2-10x savings)

- Weak Supervision: write labeling functions (10-100x savings)

- LLM Labeling: use GPT/Claude as annotators (10-50x cost reduction)

- Noisy Labels: detect and handle with cleanlab

- Combine approaches: hybrid pipelines give best results

- Quality matters: validate with human spot-checks

- Tools exist: modAL, Snorkel, cleanlab, OpenAI API

Part 8: Lab Preview

What you'll build today

This Week's Lab

Hands-on Practice:

- Active Learning from scratch
  - Implement uncertainty sampling
  - Compare to random sampling
  - Visualize learning curves

- Weak Supervision with Snorkel
  - Write labeling functions
  - Train label model
  - Analyze LF quality

- LLM Labeling
  - Prompt engineering for annotation
  - Compare GPT-3.5 vs GPT-4 quality
  - Calculate cost savings

Lab Setup Preview

```bash
# Install required packages
pip install snorkel
pip install cleanlab
pip install openai scikit-learn

# Verify installations
python -c "import snorkel; print('Snorkel OK')"
python -c "import cleanlab; print('cleanlab OK')"
python -c "import sklearn; print('sklearn OK')"
```

You'll implement a complete labeling optimization pipeline!

Next Week Preview

Week 5: Data Augmentation

- Why augmentation improves models

- Text augmentation techniques

- Image augmentation with Albumentations

- Audio and video augmentation

- When (not) to augment

More data from existing data - without labeling!

Resources

Libraries:

- modAL: https://modal-python.readthedocs.io/

- Snorkel: https://snorkel.ai/

- cleanlab: https://cleanlab.ai/

- OpenAI API: https://platform.openai.com/

Papers:

- "Data Programming" (Snorkel paper)

- "Confident Learning" (cleanlab paper)

Reading:

- Snorkel tutorials: https://www.snorkel.org/use-cases/

Questions?

Thank You!

See you in the lab!