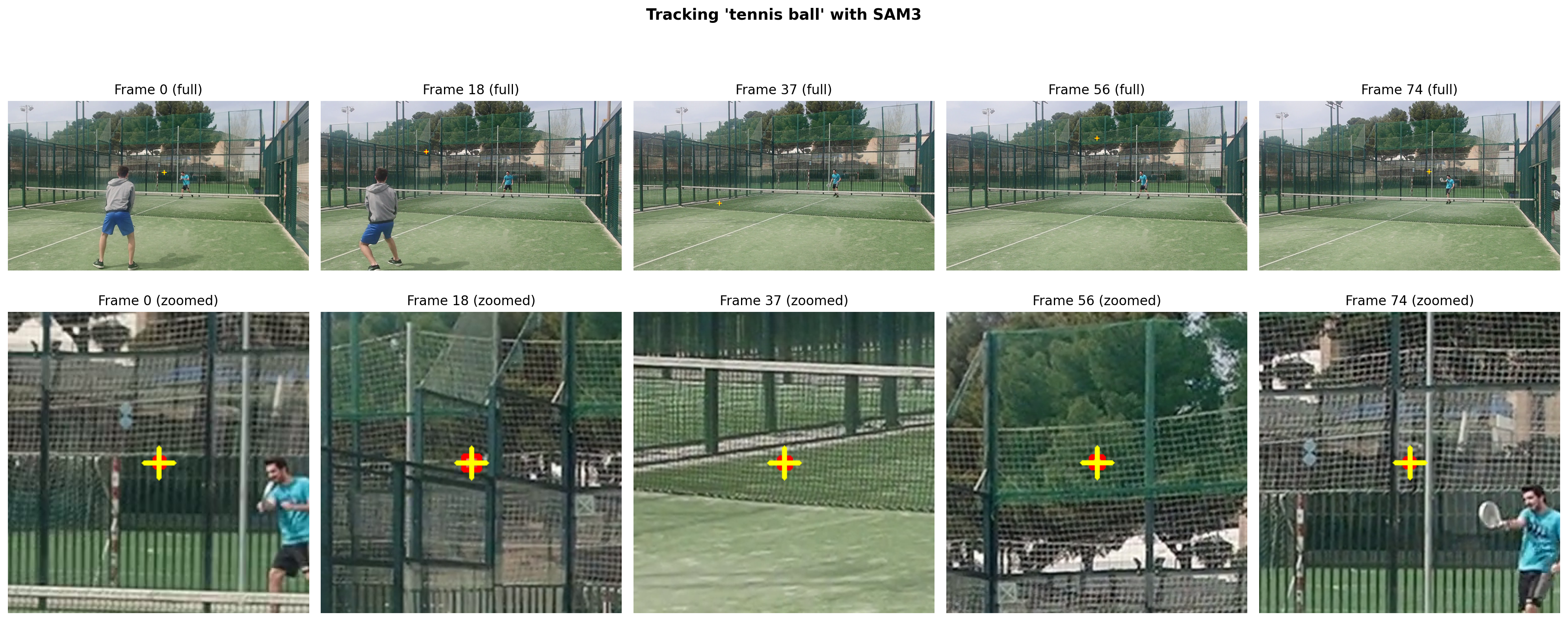

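# Show tracking on key frames with zoomed-in views around the ball
import cv2
import matplotlib.pyplot as plt
import numpy as np

# Sample five frames across the video: start, quartiles, and end
n_frames = len(video_frames)
key_frames = [0, n_frames // 4, n_frames // 2, 3 * n_frames // 4, n_frames - 1]
# Keep only frames that have tracking output; drop duplicates from very short videos
key_frames = sorted({f for f in key_frames if f in outputs_per_frame})

# squeeze=False keeps axes 2-D even when only one key frame remains
fig, axes = plt.subplots(2, len(key_frames), figsize=(4 * len(key_frames), 8), squeeze=False)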

for i, frame_idx in enumerate(key_frames):
    frame = video_frames[frame_idx].copy()
    output = outputs_per_frame.get(frame_idx, {})
    h, w = frame.shape[:2]
    cx, cy = w // 2, h // 2  # default zoom center when nothing is detected

    if 'out_obj_ids' in output and len(output['out_obj_ids']) > 0:
        probs = output['out_probs']
        masks = output['out_binary_masks']
        boxes_xywh = output['out_boxes_xywh']

        # Select only the best object (highest score)
        best_idx = np.argmax(probs)
        best_mask = np.asarray(masks[best_idx], dtype=bool)  # boolean indexing needs a bool mask
        best_box_xywh = boxes_xywh[best_idx]

        # The box is treated as normalized (x, y, width, height); scale its center to pixels
        x, y, bw, bh = best_box_xywh
        cx = int((x + bw / 2) * w)
        cy = int((y + bh / 2) * h)

        # Blend a red mask overlay into the frame at 50% opacity
        mask_overlay = frame.copy()
        mask_overlay[best_mask] = [255, 0, 0]  # red, assuming RGB frames
        frame = cv2.addWeighted(frame, 0.5, mask_overlay, 0.5, 0)

        # Draw bounding box
        x1, y1 = int(x * w), int(y * h)
        x2, y2 = int((x + bw) * w), int((y + bh) * h)
        cv2.rectangle(frame, (x1, y1), (x2, y2), (255, 0, 0), 3)

        # Draw crosshair at the box center
        cv2.drawMarker(frame, (cx, cy), (255, 255, 0), cv2.MARKER_CROSS, 30, 3)

    # Top row: full frame
    axes[0, i].imshow(frame)
    axes[0, i].set_title(f"Frame {frame_idx} (full)")
    axes[0, i].axis('off')

    # Bottom row: zoomed in (up to 300x300 crop centered on the ball, clipped at the frame edges)
    zoom_size = 150  # half-width of the crop, in pixels
    x1_crop = max(0, cx - zoom_size)
    x2_crop = min(w, cx + zoom_size)
    y1_crop = max(0, cy - zoom_size)
    y2_crop = min(h, cy + zoom_size)
    zoomed = frame[y1_crop:y2_crop, x1_crop:x2_crop]

    axes[1, i].imshow(zoomed)
    axes[1, i].set_title(f"Frame {frame_idx} (zoomed)")
    axes[1, i].axis('off')

plt.suptitle("Tracking 'tennis ball' with SAM3", fontweight='bold', fontsize=14)

plt.tight_layout()

plt.show()
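
The zoom crop in the cell above is clipped at the frame border, so a ball near an edge yields a smaller, off-center panel. As a possible alternative, the sketch below shifts the window back inside the frame so every panel keeps the same size. `crop_window` is a hypothetical helper name, not part of SAM3 or OpenCV, and it assumes pixel coordinates with the origin at the top-left.

```python
def crop_window(cx, cy, half, w, h):
    """Return (x1, y1, x2, y2) for a crop of up to 2*half pixels per side.

    Hypothetical helper: near a border the window is shifted back inside
    the frame instead of being truncated, so the crop size stays constant
    whenever the frame is at least 2*half pixels wide and tall.
    """
    side_x = min(2 * half, w)  # the crop cannot be wider than the frame
    side_y = min(2 * half, h)  # or taller than the frame
    x1 = min(max(0, cx - half), w - side_x)
    y1 = min(max(0, cy - half), h - side_y)
    return x1, y1, x1 + side_x, y1 + side_y


# Ball near the top-right corner of a 640x480 frame
x1, y1, x2, y2 = crop_window(cx=620, cy=10, half=150, w=640, h=480)
print(x1, y1, x2, y2)  # → 340 0 640 300
```

In the plotting loop this would replace the four `max`/`min` lines, with `zoomed = frame[y1:y2, x1:x2]` afterwards. The trade-off is that the ball is no longer centered in panels near a border.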